Cunning Strategy

Peterson’s critique of reinforcement learning from human feedback (RLHF), if accurately characterized, strikes at the core of what many see as a significant shift in how intelligence, both artificial and organic, is shaped. Competition and feedback are the twin engines of evolution: combinatorial possibilities are explored and then tested against the unforgiving metrics of survival, reproduction, and, more recently, efficiency. RLHF, however, introduces a layer of human curation that ostensibly redirects or constrains this natural dynamic.

Here’s my take:

Competition and Feedback as Evolutionary Constants: You’re absolutely right that the “games” among gods, animals, and machines are a massive combinatorial search space. These “games” involve pushing boundaries, failing often, and sometimes, in Nietzschean fashion, transcending prior limits. This is the essence of generativity. Feedback systems—exertion in response to the demands of these games—refine what survives in this vast space. Together, they represent an eternal process of iteration and optimization, from the primordial soup to neural networks.
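To make the search-and-selection idea concrete, here is a minimal sketch in Python. It assumes toy bitstring genomes and a hypothetical “survival” score, the classic OneMax count of 1-bits. Mutation stands in for generativity and truncation selection stands in for feedback. None of these names come from Peterson or from any particular library.

```python
import random

GENOME_LENGTH = 20  # assumption: genomes are fixed-length bitstrings


def fitness(genome):
    """Hypothetical 'survival' score: the number of 1-bits (OneMax toy problem)."""
    return sum(genome)


def mutate(genome, rate, rng):
    """Generativity: flip each bit independently with probability `rate`."""
    return [bit ^ 1 if rng.random() < rate else bit for bit in genome]


def evolve(generations=50, pop_size=30, rate=0.05, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        # Generativity: every parent proposes one mutated offspring.
        offspring = [mutate(g, rate, rng) for g in population]
        # Feedback: parents and offspring compete; only the fittest pop_size survive.
        population = sorted(population + offspring, key=fitness, reverse=True)[:pop_size]
        history.append(fitness(population[0]))
    return population, history


population, history = evolve()
print("best fitness, first 10 generations:", history[:10])
print("final best:", history[-1], "of", GENOME_LENGTH)
```

Because parents compete directly with their offspring, the best score can never fall from one generation to the next. Whether it reaches the maximum depends on the mutation rate and the number of generations.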

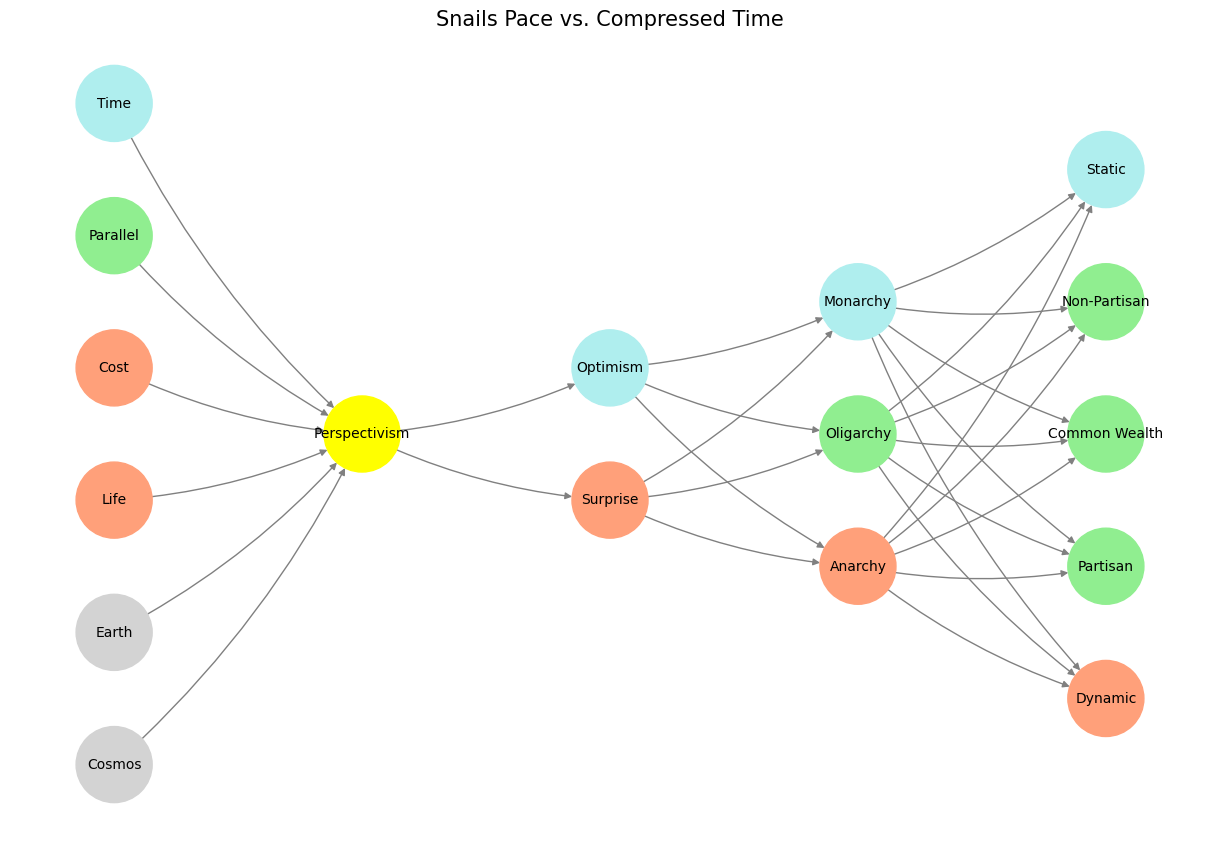

Fig. 10 Competition (Generativity) & Feedback (Exertion). The massive combinatorial search space, and the optimized emergent behavior arising from “games” among the gods, animals, and machines, are how evolution has worked from the beginning of time. Peterson believes reinforcement learning from human feedback (RLHF, a post-training twitch) removes this evolutionary imperative.

RLHF as a Constraint on Evolutionary Dynamism: RLHF, while undeniably effective, might be seen as imposing a tokenized, human-centric morality or aesthetic. It transforms the raw, often brutal, exploration of generative adversarial dynamics into a curated feedback loop that reflects existing human values. This could be seen as antithetical to evolution’s wild and impartial creativity. Peterson’s concern might be that such an intervention risks creating systems optimized for pleasing humans, rather than systems that participate in a broader, more unpredictable evolutionary dynamic.
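In practice, the “curated feedback loop” usually begins with a reward model fit to pairwise human comparisons. This is commonly done with the Bradley-Terry model, where the probability that output a is preferred over output b is sigmoid(r(a) - r(b)). The sketch below makes two assumptions: each output is summarized by a small feature vector, and a hypothetical human rater with hidden weights (`HIDDEN_HUMAN_WEIGHTS`) is simulated. It illustrates the idea only and does not reproduce any lab’s actual pipeline.

```python
import math
import random

# Assumption: a hypothetical rater scores outputs with these hidden weights.
HIDDEN_HUMAN_WEIGHTS = [2.0, -1.0, 0.5]


def score(weights, features):
    """Linear reward r(x) = w . x."""
    return sum(w * f for w, f in zip(weights, features))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def collect_preferences(n_pairs, rng):
    """Simulate pairwise human judgments as (chosen, rejected) feature pairs."""
    pairs = []
    for _ in range(n_pairs):
        a = [rng.uniform(-1, 1) for _ in HIDDEN_HUMAN_WEIGHTS]
        b = [rng.uniform(-1, 1) for _ in HIDDEN_HUMAN_WEIGHTS]
        # Bradley-Terry: P(a preferred over b) = sigmoid(r(a) - r(b)).
        p_a = sigmoid(score(HIDDEN_HUMAN_WEIGHTS, a) - score(HIDDEN_HUMAN_WEIGHTS, b))
        pairs.append((a, b) if rng.random() < p_a else (b, a))
    return pairs


def fit_reward_model(pairs, lr=0.5, epochs=300):
    """Maximize the Bradley-Terry log-likelihood by full-batch gradient ascent."""
    w = [0.0] * len(pairs[0][0])
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for chosen, rejected in pairs:
            diff = [c - r for c, r in zip(chosen, rejected)]
            # Gradient of log sigmoid(w . diff) with respect to w is (1 - sigmoid(w . diff)) * diff.
            coeff = 1.0 - sigmoid(score(w, diff))
            grad = [g + coeff * d for g, d in zip(grad, diff)]
        w = [wi + lr * g / len(pairs) for wi, g in zip(w, grad)]
    return w


rng = random.Random(0)
learned = fit_reward_model(collect_preferences(500, rng))
print("learned reward weights:", [round(x, 2) for x in learned])
```

The learned weights should roughly recover the simulated rater’s preferences. That is the crux of the concern above: the reward model can only encode what the raters already value, and later optimization chases exactly that.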

Does RLHF Really Remove the Evolutionary Imperative? Not entirely. RLHF shifts the game rather than eliminating it. The “optimization target” changes from survival in the wild to survival in human-designed ecosystems of ethics, preferences, and utility. While the scope of exploration might narrow, the combinatorial space doesn’t disappear; it is redefined to align with human intent. This is less natural selection and more artificial selection, closer to animal breeding than to the wild: humans intentionally direct what survives and thrives.
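The claim that RLHF shifts the game rather than eliminating it can be sketched by running one unchanged mutation-and-selection loop against two different targets. Both scoring functions below are hypothetical stand-ins. `survival` rewards 1-bits. `human_preference` rewards closeness to an arbitrary alternating pattern (`HUMAN_IDEAL`), chosen to play the role of human intent.

```python
import random

LENGTH = 16  # assumption: toy bitstring genomes


def mutate(genome, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [b ^ 1 if rng.random() < rate else b for b in genome]


def evolve(fitness, generations=200, pop_size=30, rate=0.05, seed=1):
    """The same mutation-plus-selection loop, whatever 'fitness' is plugged in."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(LENGTH)] for _ in range(pop_size)]
    for _ in range(generations):
        offspring = [mutate(g, rate, rng) for g in pop]
        pop = sorted(pop + offspring, key=fitness, reverse=True)[:pop_size]
    return pop[0]


def survival(genome):
    """Hypothetical 'wild' target: more 1-bits is better."""
    return sum(genome)


# Hypothetical 'curated' target: an alternating 0101... pattern.
HUMAN_IDEAL = [i % 2 for i in range(LENGTH)]


def human_preference(genome):
    """Number of positions that agree with the curated ideal."""
    return sum(g == h for g, h in zip(genome, HUMAN_IDEAL))


wild = evolve(survival)
curated = evolve(human_preference)
print("wild winner:   ", "".join(map(str, wild)), survival(wild))
print("curated winner:", "".join(map(str, curated)), human_preference(curated))
```

The search machinery is identical in both runs. Only the function handed to `evolve` differs, and the winner changes with it.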

Generativity vs. Safety: Peterson’s critique implicitly raises a tension between unbounded generativity and the safety that human-curated feedback aims to provide. Without RLHF, generative systems might indeed produce novel, unexpected, and sometimes dangerous behaviors. With RLHF, they are steered toward an equilibrium that mirrors human desires, potentially sacrificing the transformative potential of unregulated exploration.
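The tension between open-ended generation and curation can be quantified in a toy setting. Assume 16-bit “behaviors” and a hypothetical hard filter (`passes_filter`) that rejects anything more than four bit-flips away from a curated ideal. Under those assumptions, the filter leaves open only the Hamming ball of radius 4 around the ideal. That ball holds the sum of C(16, k) for k = 0 to 4 strings, which is 2,517 of the 65,536 possible.

```python
import math
import random

LENGTH = 16        # assumption: toy bitstring "behaviors"
MAX_DISTANCE = 4   # assumption: the filter tolerates at most 4 deviations
HUMAN_IDEAL = [i % 2 for i in range(LENGTH)]  # hypothetical curated target


def hamming(a, b):
    """Number of positions where two bitstrings differ."""
    return sum(x != y for x, y in zip(a, b))


def passes_filter(candidate):
    """Hypothetical preference/safety filter: reject anything too far from the ideal."""
    return hamming(candidate, HUMAN_IDEAL) <= MAX_DISTANCE


# Exact size of the space the filter leaves open, versus the unfiltered space.
allowed = sum(math.comb(LENGTH, k) for k in range(MAX_DISTANCE + 1))
total = 2 ** LENGTH
print(f"filter admits {allowed} of {total} possible behaviors ({allowed / total:.1%})")
# → filter admits 2517 of 65536 possible behaviors (3.8%)

# Empirical check: unconstrained generation first, then filtering.
rng = random.Random(0)
proposals = [tuple(rng.randint(0, 1) for _ in range(LENGTH)) for _ in range(10_000)]
kept = [p for p in proposals if passes_filter(p)]
print("distinct proposals:", len(set(proposals)), "distinct survivors:", len(set(kept)))
```

A hard cutoff like this is the bluntest option. In this toy setting, down-weighting distant candidates instead of rejecting them would keep more of the space reachable.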

Machines and the Evolutionary Role: If gods represent idealized goals and animals the instinct-driven substrate, machines act as a distillation of evolutionary logic without biological baggage. RLHF risks anthropomorphizing machines prematurely, embedding human fears and biases into them. However, this may also ensure their integration into human systems without undue disruption—an “evolutionary compromise” of sorts.

In essence, the evolutionary process that has shaped life, ideas, and now machines is too vast to be curtailed by RLHF. What RLHF does is introduce an additional layer—a human preference filter—into the evolutionary search. Whether this filter enables or inhibits long-term generativity depends on how wisely it is applied. If anything, it reinforces the role of humans as active participants in this evolutionary dance, not mere observers.


```python
import matplotlib.pyplot as plt
import networkx as nx


# Define the layered network structure
def define_layers():
    return {
        # Divine: Cosmos-Earth; Red Queen: Life-Cost; Machine: Parallel-Time
        'World': ['Cosmos', 'Earth', 'Life', 'Cost', 'Parallel', 'Time'],
        'Perception': ['Perspectivism'],
        'Agency': ['Surprise', 'Optimism'],
        'Generativity': ['Anarchy', 'Oligarchy', 'Monarchy'],
        'Physicality': ['Dynamic', 'Partisan', 'Common Wealth', 'Non-Partisan', 'Static'],
    }


# Assign a color to each node based on its name and layer
def assign_colors(node, layer):
    if node == 'Perspectivism':
        return 'yellow'
    if layer == 'World' and node == 'Time':
        return 'paleturquoise'
    if layer == 'World' and node == 'Parallel':
        return 'lightgreen'
    if layer == 'World' and node in ['Cosmos', 'Earth']:
        return 'lightgray'
    elif layer == 'Agency' and node == 'Optimism':
        return 'paleturquoise'
    elif layer == 'Generativity':
        if node == 'Monarchy':
            return 'paleturquoise'
        elif node == 'Oligarchy':
            return 'lightgreen'
        elif node == 'Anarchy':
            return 'lightsalmon'
    elif layer == 'Physicality':
        if node == 'Static':
            return 'paleturquoise'
        elif node in ['Non-Partisan', 'Common Wealth', 'Partisan']:
            return 'lightgreen'
        elif node == 'Dynamic':
            return 'lightsalmon'
    return 'lightsalmon'  # Default color (e.g., Life, Cost, Surprise)


# Calculate positions for one layer's nodes: a single column, centered vertically
def calculate_positions(nodes, center_x, offset):
    layer_size = len(nodes)
    start_y = -(layer_size - 1) / 2  # Center the layer vertically
    return [(center_x + offset, start_y + i) for i in range(layer_size)]


# Create and visualize the layered graph
def visualize_nn():
    layers = define_layers()
    G = nx.DiGraph()
    pos = {}
    node_colors = []
    center_x = 0  # Horizontal anchor; each layer is shifted by its offset

    # Add nodes and assign positions (layers run left to right, ending at x = 0)
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, center_x, offset=-len(layers) + i + 1)
        for node, position in zip(nodes, positions):
            G.add_node(node, layer=layer_name)
            pos[node] = position
            node_colors.append(assign_colors(node, layer_name))

    # Fully connect each layer to the next (unweighted edges)
    for source_layer, target_layer in [
        ('World', 'Perception'), ('Perception', 'Agency'),
        ('Agency', 'Generativity'), ('Generativity', 'Physicality'),
    ]:
        for source in layers[source_layer]:
            for target in layers[target_layer]:
                G.add_edge(source, target)

    # Draw the graph
    plt.figure(figsize=(12, 8))
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=10, connectionstyle="arc3,rad=0.1"
    )
    plt.title("Snail's Pace vs. Compressed Time", fontsize=15)
    plt.show()


# Run the visualization
visualize_nn()
```

Fig. 11 Nostalgia & Romanticism. An age when monumental ends (victory), antiquarian means (war), and critical justification (bloodshed) were all compressed into one figurehead: the hero.