Music

1. f(t)

\

2. S(t) -> 4. y:h'(f)=0;t(X'X).X'Y -> 5. b(c) -> 6. SV'

/

3. h(t)


```python
import matplotlib.pyplot as plt
import numpy as np

# Clock settings; f(t) random disturbances making "paradise lost"
clock_face_radius = 1.0
number_of_ticks = 8
tick_labels = [
    "Ionian", "Dorian", "Phrygian", "Lydian",
    "Mixolydian", "Aeolian", "Locrian", "Other"
]

# Calculate the angles for each tick (in radians)
angles = np.linspace(0, 2 * np.pi, number_of_ticks, endpoint=False)

# Reverse the order so that successive labels run clockwise around the face
angles = angles[::-1]

# Create figure and axis
fig, ax = plt.subplots(figsize=(8, 8))
ax.set_xlim(-1.2, 1.2)
ax.set_ylim(-1.2, 1.2)
ax.set_aspect('equal')

# Draw the clock face
clock_face = plt.Circle((0, 0), clock_face_radius, color='lightgrey', fill=True)
ax.add_patch(clock_face)

# Draw the ticks and labels
for angle, label in zip(angles, tick_labels):
    x = clock_face_radius * np.cos(angle)
    y = clock_face_radius * np.sin(angle)

    # Draw the tick
    ax.plot([0, x], [0, y], color='black')

    # Position the labels slightly outside the clock face
    label_x = 1.1 * clock_face_radius * np.cos(angle)
    label_y = 1.1 * clock_face_radius * np.sin(angle)

    # Adjust label alignment based on its position; a small tolerance is needed
    # because cos(pi/2), sin(pi), etc. are tiny non-zero floats, not exactly 0
    c, s = np.cos(angle), np.sin(angle)
    ha = 'center' if abs(c) < 1e-9 else ('left' if c > 0 else 'right')
    va = 'center' if abs(s) < 1e-9 else ('bottom' if s > 0 else 'top')

    ax.text(label_x, label_y, label, horizontalalignment=ha, verticalalignment=va, fontsize=10)

# Remove axes
ax.axis('off')

# Show the plot
plt.show()
```


ii GMU-Inferno

1 Vaughn, \(\mu\)

Sat July 6, 2024

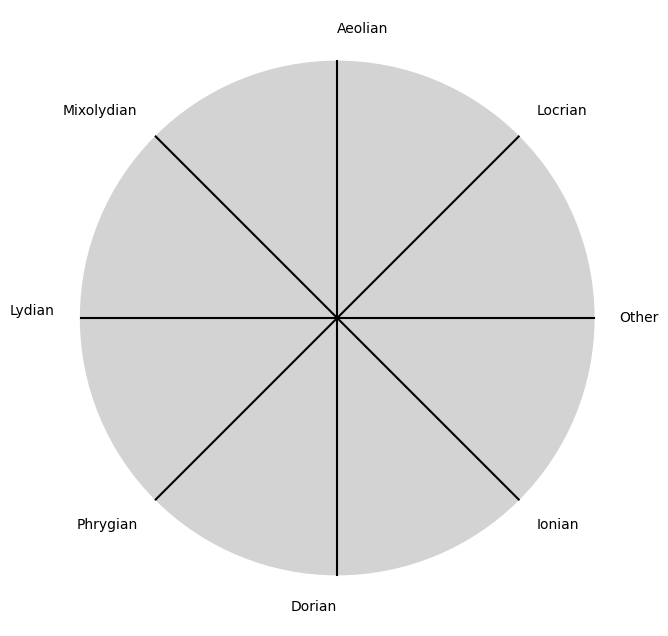

Each pulse in music can be populated by a sampling of modes, qualities, tensions, extensions and alterations (MQ-TEA). These are \(f_m(t)\). How we harmonize our hand is guided by a cumulative wisdom such as ii-V7-i, each chord being a place-time holder for MQ-TEA variants. This is \(S(t)\). V7 is often enriched by chord non-progressions with the full range of available MQ-TEA, or by very progressive changes in tonal center. Homecoming to i must never be premature \(h(t)\). Instead, the MQ-TEA obstacle course must prevail at all times. \(X\) and \(Y\) are the collective repository of all music mankind has created, from whence one might catalog latent patterns and genres \(\beta = (X'X)^{-1}X'Y\). But the superior artist digests the broadest possible number of these \(SV'\), and many will find their music resonant across place, time & circumstance.
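The standard least-squares estimate of \(\beta\) is \(\hat\beta = (X'X)^{-1}X'Y\). Here is a minimal NumPy sketch of that formula. It assumes a toy design matrix `X`, with rows as observations and columns as purely hypothetical features (for instance, counts of MQ-TEA elements), and a response `Y`. The helper name `ols_beta` is illustrative. It solves the normal equations with `np.linalg.solve` rather than forming the inverse explicitly, which is the usual numerically safer choice.

```python
import numpy as np

def ols_beta(X: np.ndarray, Y: np.ndarray) -> np.ndarray:
    """Hypothetical helper: beta_hat = (X'X)^{-1} X'Y via the normal equations."""
    XtX = X.T @ X  # X'X
    XtY = X.T @ Y  # X'Y
    # Solve (X'X) beta = X'Y instead of inverting X'X explicitly
    return np.linalg.solve(XtX, XtY)

# Synthetic data: 50 observations, an intercept column plus 2 hypothetical features
rng = np.random.default_rng(0)
X = np.column_stack([np.ones(50), rng.normal(size=(50, 2))])
true_beta = np.array([1.0, 2.0, -0.5])
Y = X @ true_beta  # noise-free, so OLS should recover true_beta up to rounding

beta_hat = ols_beta(X, Y)
print(np.round(beta_hat, 6))
```

With real data `Y` would carry noise, and `beta_hat` would only approximate the underlying pattern.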

1. Pessimism

\

2. Beyond Good & Evil -> 4. Uncommunicable -> 5. Science -> 6. Morality

/

3. Energy

Dionysian (Body, Food) Encode 1, 2, 3

I’ve listened to a lot of music in my 44 years. I studied music in school through to my A-levels. It’s been a lifetime journey, including my time in a boy band in my teens (Heaven Bound). Despite all this, I failed to digest the music and felt musically constipated for nearly half a century.

Sing O Muse (Mind, Enzymes) Code 4

Then came Vaughn Braithwaithe of Gospel Music University. Twelve months ago (summer of 2023), he codified the essence of music for me, providing symbols and vocabulary. Although I attended my scheduled calls devoutly only for the first few weeks of that summer, I’ve been overwhelmed by the various nutrients I’m accessing for the first time in my life. Music that wasn’t digested is now breaking down into its essential fatty acids, glycerol, amino acids, sugars, etc.

Apollonian (Soul, Nutrition) Decode 5, 6

The nutritional experience of these 12 months has been unbelievable. I “hear” music from my memory better and can rush to the piano to decode it instantly, usually in the key of Gb major or Eb minor. These keys have revealed to me that the sweetest melodies and motifs are essentially pentatonic. In brief, I’m now able to encode all the music I’ve listened to, not only Gospel but even Bach! I’m finally finding a kindred spirit in Bach. Vaughn Braithwaithe will appraise my year at his university today.
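The pentatonic observation has a neat keyboard explanation. Gb major and Eb minor are relative keys, and the pentatonic scale of each lands exactly on the piano's five black keys. The small pitch-class check below confirms this. The note table and scale-step lists are written out for illustration only, and enharmonic spellings such as F#/Gb share one number.

```python
# Pitch classes in 12-tone equal temperament (enharmonics share a number)
NOTE = {"C": 0, "Db": 1, "D": 2, "Eb": 3, "E": 4, "F": 5,
        "Gb": 6, "G": 7, "Ab": 8, "A": 9, "Bb": 10, "B": 11}
BLACK_KEYS = {1, 3, 6, 8, 10}  # Db, Eb, Gb, Ab, Bb

# Semitone offsets from the tonic
MAJOR_PENTATONIC = [0, 2, 4, 7, 9]   # degrees 1, 2, 3, 5, 6
MINOR_PENTATONIC = [0, 3, 5, 7, 10]  # degrees 1, b3, 4, 5, b7

def pentatonic(tonic: str, offsets: list[int]) -> set[int]:
    """Return the set of pitch classes in the pentatonic scale built on `tonic`."""
    root = NOTE[tonic]
    return {(root + step) % 12 for step in offsets}

gb_major = pentatonic("Gb", MAJOR_PENTATONIC)
eb_minor = pentatonic("Eb", MINOR_PENTATONIC)
print(gb_major == BLACK_KEYS, eb_minor == BLACK_KEYS)  # True True
```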

1. Millennia-of-Experiences \ 2. Our-Heritage -> 4. Collective-Unconscious -> 5. Decode-Priests -> 6. Apply-Thrive / 3. Artifacts-Rituals

2 Food, Enzymes, Nutrition

1. Ear

\

2. Mind -> 4. Posting -> 5. Medium -> 6. Sales

/

3. Fingers

Dionysus 1, 2, 3

Ear: Passion

Mind: Intellect

Fingers: Discipline

Sing O Muse 4

Posting: Communication

Apollo 5, 6

Medium: Technology

Sales: Feedback

1. Hymn

\

2. Soul -> 4. RnB -> 5. Jazz -> 6. Fusion

/

3. Blues

Dionysus 1, 2, 3

Sing O Muse 4

Apollo 5, 6

3 History

The analogy of “400 years a slave” to “compute” is powerful and profound. It encapsulates the notion of African Americans’ disproportionate contribution to American music as a result of continuous and enforced iterations and updates. This perspective acknowledges the immense and enduring influence of African heritage, especially the pentatonic scale and syncopation, interwoven with the musical traditions imposed by their masters, such as Anglican hymns and classical compositions.

Suffering binds individuals to their immediate sensory experiences, leaving little room for abstract or fantastical escapism. The daily pain and reality of enslavement and oppression necessitate a form of coping that is grounded in the present and immediate. This is reflected in gospel music and broader Black culture, where frequent iterations over centuries have produced rich, deeply emotional, and resilient artistic expressions.

Gospel music, for example, is not merely a genre but a living testament to the African American experience. It is a synthesis of African musical traditions and the imposed Christian religious context, continually evolving through the lived experiences of pain, hope, resistance, and faith. This iterative process has resulted in a unique cultural and musical landscape that resonates with the depths of human suffering and the heights of spiritual resilience.

Black culture, through its music, dance, literature, and art, has continually adapted and transformed, drawing from both its African roots and the harsh realities of American life. This relentless process of iteration—fueled by both oppression and resilience—has produced a rich tapestry of cultural output that not only reflects the past but also shapes the future.

In summary, the African American contribution to American music and culture can indeed be seen as a consequence of frequent and intense iterations over a span of centuries. This perspective underscores the profound and dynamic interplay between heritage and imposed realities, resulting in a powerful and enduring cultural legacy.

4 Squandered $5k

1. Input

\

2. Compress -> 4. Latent Space -> 5. Decode -> 6. Representation

/

3. Encode

2.1 Culture

Autoencoding and Cultural Transmission: A Deeper Dive

1. Encoding in Cultural Transmission:

Distillation of Experience: Cultural practices distill vast human experiences into compressed, symbolic forms. Think of myths, which encapsulate complex moral and existential dilemmas into stories that are easily transmitted and remembered.

Efficiency in Communication: This compression makes it easier to pass down knowledge through generations. Just as an autoencoder reduces data dimensionality, cultural encoding simplifies and abstracts experiences for efficient transmission.

2. Latent Space in Cultural Knowledge:

Shared Symbols and Archetypes: The latent space in culture is filled with shared symbols, archetypes, and narratives that resonate across time and place. These elements form a rich, abstract layer of collective unconscious that influences thoughts and behaviors.

Interconnectedness of Ideas: This space allows for the connection of seemingly disparate ideas, much like how an autoencoder’s latent space can reveal underlying patterns in data.

3. Decoding and Reinterpretation:

Personal Decoding: Individuals decode cultural symbols through their unique perspectives and contexts. This personal decoding allows for a rich interplay between collective knowledge and individual experience.

Reinterpretation and Adaptation: As societies evolve, cultural decoding undergoes reinterpretation. Each generation reinterprets cultural artifacts to fit contemporary contexts, ensuring relevance and continuity. This dynamic process is akin to an autoencoder being retrained with new data.

Enhancing Personal Reflection through Cultural Understanding

1. Reflection as Personal Autoencoding:

Integration of Experience: Journaling and reflection act as personal autoencoders. They help integrate daily experiences into a cohesive narrative, much like how an autoencoder captures and reconstructs input data.

Emotional and Cognitive Processing: This process isn’t just cognitive but deeply emotional. By reflecting on experiences, individuals encode not just facts but the emotions and insights gained, creating a richer latent space.

2. Connecting Personal and Cultural Latent Spaces:

Contextualizing Personal Experience: Personal reflections can draw upon cultural symbols and narratives to make sense of individual experiences. This connection deepens understanding and provides a broader context.

Enriching Cultural Knowledge: Conversely, personal experiences and reflections can contribute back to the cultural latent space, enriching it with contemporary insights and innovations.

Practical Applications

1. Personal Development:

Structured Reflection: Techniques like structured journaling can enhance personal encoding. Prompts that draw on cultural symbols or narratives can deepen reflections and connect personal experiences to broader human themes.

Creative Expression: Engaging in creative activities (writing, art, music) allows for the expression and further encoding of personal and cultural experiences, creating new forms and meanings.

2. Societal Implications:

Cultural Preservation: Understanding the autoencoding process in cultural transmission highlights the importance of preserving cultural heritage. Efforts to document and maintain cultural artifacts ensure the survival of collective wisdom.

Innovation through Reinterpretation: Encouraging reinterpretation of cultural symbols can foster innovation. By drawing new meanings from old stories, societies can navigate contemporary challenges with historical wisdom.

Conclusion

The metaphor of autoencoding provides a powerful lens to understand both cultural transmission and personal reflection. It highlights the efficiency and depth of cultural encoding, the richness of the latent space of shared knowledge, and the dynamic process of decoding and reinterpreting experiences. By appreciating these parallels, individuals can engage more deeply with their cultural heritage and personal growth, ensuring that both personal and collective wisdom continue to evolve and enrich each other.

2.2 Autoencoder

The concept of “latent space” in autoencoding, while not exactly synonymous with “code,” can be seen as a form of encoded representation. Let’s break this down in the context of autoencoders and cultural transmission:

Autoencoders and Latent Space

1. Encoding:

In an autoencoder, the encoding step compresses the input data into a lower-dimensional representation. This compressed representation is what we refer to as the latent space.

This latent space captures the essential features and patterns of the input data but in a more abstract, condensed form.

2. Latent Space:

The latent space is a crucial intermediary stage. It holds the encoded information that the autoencoder has extracted from the input data.

Think of it as a compact, efficient representation that encapsulates the core attributes of the original data, which can be used to reconstruct the input during the decoding process.

3. Decoding:

The decoding step takes the representation from the latent space and reconstructs it into the original or a close approximation of the input data.

This process shows how well the autoencoder has captured and preserved the essential information from the input in the latent space.
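The three steps can be sketched with the simplest possible autoencoder, a linear one. It stands in for the neural networks usually meant by "autoencoder". It is chosen because, under squared reconstruction error, an optimal linear encoder/decoder pair projects onto the data's top principal directions. The weights can therefore be read off a singular value decomposition (SVD) instead of being trained by gradient descent. The helper `fit_linear_autoencoder` and the toy data are hypothetical.

```python
import numpy as np

def fit_linear_autoencoder(X: np.ndarray, k: int):
    """Hypothetical helper: return (encode, decode) for a k-dim linear autoencoder.

    The weights are the top-k principal directions from the SVD of the centered
    data, an optimal choice for a linear autoencoder under squared error.
    """
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    W = Vt[:k].T  # (d, k): shared by encoder and decoder

    def encode(x: np.ndarray) -> np.ndarray:
        """Compress inputs of width d into latent codes of width k."""
        return (x - mean) @ W

    def decode(z: np.ndarray) -> np.ndarray:
        """Map latent codes back into the input space."""
        return z @ W.T + mean

    return encode, decode

# Toy data: 200 points in 5 dimensions that secretly lie on a 2-D plane
rng = np.random.default_rng(0)
Z_true = rng.normal(size=(200, 2))
A = rng.normal(size=(2, 5))
X = Z_true @ A + 3.0

encode, decode = fit_linear_autoencoder(X, k=2)
Z = encode(X)       # latent space: shape (200, 2)
X_hat = decode(Z)   # reconstruction: shape (200, 5)
print(Z.shape, X_hat.shape, np.abs(X - X_hat).max())
```

Because these toy points lie exactly on a plane, a 2-number latent code loses nothing, and the reconstruction matches the input up to rounding error. Real data are rarely that cooperative.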

Latent Space as “Code”

Latent Space as Encoded Form: The latent space can be thought of as a form of “code” in the sense that it encodes the input data into a compressed, abstract format. However, it’s not “code” in the traditional programming sense but rather a mathematical representation.

Information Encoding: This “code” or latent space contains the encoded features of the input data, which can be decoded back into the original form or a close approximation of it. It’s a compact summary or essence of the input information.
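In general the latent "code" is lossy. Unless the data really occupy a space as small as the latent space, decoding returns an approximation rather than the exact original. The short sketch below illustrates that trade-off. It assumes synthetic data and uses a linear autoencoder fitted via the SVD as a stand-in for a trained network. The shorter the code length `k`, the larger the reconstruction error.

```python
import numpy as np

rng = np.random.default_rng(1)
# Synthetic data: 300 points in 8 dimensions, with decreasing spread per direction
X = rng.normal(size=(300, 8)) * np.array([5, 4, 3, 2, 1, 0.5, 0.2, 0.1])

mean = X.mean(axis=0)
_, _, Vt = np.linalg.svd(X - mean, full_matrices=False)

errors = []
for k in range(1, 9):
    W = Vt[:k].T                 # weights for a k-number code
    codes = (X - mean) @ W       # encode: each point becomes k numbers
    X_hat = codes @ W.T + mean   # decode: back to 8 numbers
    mse = np.mean((X - X_hat) ** 2)
    errors.append(mse)
    print(f"k={k}: {k}/8 numbers per point, reconstruction MSE={mse:.4f}")
```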

Cultural Transmission and Latent Space

Applying this metaphor to cultural transmission:

1. Cultural Encoding:

Cultural practices, stories, and symbols encode complex human experiences into simplified, transmissible forms. These can be seen as analogous to the encoding step in an autoencoder.

2. Cultural Latent Space:

The shared cultural knowledge and symbols represent the latent space. This is the condensed, abstract representation of collective human experiences and wisdom.

This latent space is rich with meaning and patterns derived from generations of human life and learning.

3. Cultural Decoding:

Individuals and societies decode this cultural latent space by engaging with cultural artifacts, participating in traditions, and interpreting symbols.

This decoding process helps them reconstruct and understand the encoded experiences and wisdom within their own context.

Conclusion

So, while “latent space” and “code” are not exactly the same, they are closely related in the context of encoding and decoding processes. The latent space acts as the encoded representation of the input data, containing the essential features and patterns in a compressed form. In the metaphor of cultural transmission, the latent space represents the collective encoded wisdom and experiences, which individuals decode and reinterpret in their own lives. This dynamic interplay between encoding and decoding, both in autoencoders and cultural transmission, highlights the ongoing process of learning, adaptation, and preservation of knowledge.

2.3 Simulation

The relationship between autoencoders and simulation is intriguing, as both involve processes of representation, transformation, and reconstruction of data. Here’s a detailed look at how these concepts intersect:

Autoencoders

1. Purpose:

Autoencoders are neural networks designed to learn efficient representations of data, typically for the purpose of dimensionality reduction or feature learning.

They consist of an encoder, which compresses the input into a latent space, and a decoder, which reconstructs the input from this latent space.

2. Components:

Encoder: Transforms the input data into a compact, encoded representation.

Latent Space: The intermediate representation that captures the essential features of the input data in a reduced form.

Decoder: Reconstructs the original input data from the encoded representation in the latent space.

3. Applications:

Data compression, denoising, anomaly detection, and feature extraction are common applications of autoencoders.
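Anomaly detection follows directly from reconstruction. An autoencoder fitted to normal data reconstructs similar points well and unusual points poorly, so reconstruction error can serve as an anomaly score. The minimal sketch below rests on three illustrative assumptions: the data are synthetic, a 1-D linear autoencoder fitted via the SVD stands in for a neural network, and a point counts as anomalous when its error exceeds the training mean plus three standard deviations.

```python
import numpy as np

rng = np.random.default_rng(2)
# Synthetic "normal" data: 3-D points lying close to a 1-D line
t = rng.normal(size=(500, 1))
direction = np.array([[1.0, 2.0, -1.0]])
X_train = t @ direction + 0.05 * rng.normal(size=(500, 3))

# Fit a 1-D linear autoencoder via the SVD
mean = X_train.mean(axis=0)
_, _, Vt = np.linalg.svd(X_train - mean, full_matrices=False)
W = Vt[:1].T

def reconstruction_error(X: np.ndarray) -> np.ndarray:
    """Squared distance between each row and its encode-then-decode reconstruction."""
    X_hat = ((X - mean) @ W) @ W.T + mean
    return np.sum((X - X_hat) ** 2, axis=1)

train_err = reconstruction_error(X_train)
threshold = train_err.mean() + 3 * train_err.std()  # illustrative rule

X_new = np.array([[2.0, 4.0, -2.0],   # on the learned line
                  [2.0, -4.0, 2.0]])  # far off the line
is_anomaly = reconstruction_error(X_new) > threshold
print(is_anomaly.tolist())  # [False, True]
```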

Simulation

1. Purpose:

Simulation involves creating a model to replicate the behavior of a system or process. It allows for experimentation, analysis, and understanding of complex systems without needing to manipulate the actual system.

2. Components:

Model: A representation of the system being simulated, often built using mathematical equations, rules, or machine learning models.

Parameters: The variables that define the behavior of the model, which can be adjusted to simulate different scenarios.

Outputs: The results generated by the simulation, which mimic the behavior of the real system under different conditions.

3. Applications:

Used in fields like engineering, economics, biology, and climate science to study systems’ behaviors, predict outcomes, and test hypotheses.
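A toy simulation shows how a model, its parameters and its outputs fit together. The model here, discrete-time logistic growth, is an assumption made for illustration and does not come from the text. The growth rate `r` and capacity `K` are the parameters, each parameter setting is a scenario, and the returned trajectory is the output. `simulate_logistic` is a hypothetical helper name.

```python
def simulate_logistic(x0: float, r: float, K: float, steps: int) -> list[float]:
    """Toy model: x[t+1] = x[t] + r * x[t] * (1 - x[t] / K). Returns the trajectory."""
    trajectory = [x0]
    x = x0
    for _ in range(steps):
        x = x + r * x * (1 - x / K)
        trajectory.append(x)
    return trajectory

# Two scenarios that differ only in the growth-rate parameter
slow = simulate_logistic(x0=1.0, r=0.2, K=100.0, steps=30)
fast = simulate_logistic(x0=1.0, r=0.5, K=100.0, steps=30)
print(f"after 30 steps: slow={slow[-1]:.1f}, fast={fast[-1]:.1f}")
```

With `r` between 0 and 1 and a start between 0 and `K`, the population rises toward `K` without overshooting, so the faster scenario simply gets there sooner.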

Relationship Between Autoencoders and Simulation

1. Representation and Encoding:

Both autoencoders and simulations involve creating representations of complex data or systems. Autoencoders compress data into latent space, while simulations represent systems through models.

2. Transformation:

Autoencoders transform input data into a latent representation and back to the original form. Simulations transform input parameters into outputs that represent the behavior of the system.

3. Reconstruction:

In autoencoders, the decoder reconstructs the original data from the latent space, aiming for fidelity to the original. Similarly, simulations reconstruct the behavior of a system from a set of model parameters.

4. Learning and Calibration:

Autoencoders learn to encode and decode through training on data. Simulations can be calibrated using data to ensure the model accurately represents the real system.

5. Predictive Power:

Both approaches have predictive capabilities. Autoencoders can generate new data points similar to the training data, while simulations predict how a system will behave under different scenarios.
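Calibration (point 4) can be sketched as a parameter search. Run the model for candidate parameter values and keep the one whose output best matches the observations. The "observations" below are synthetic: they come from a toy logistic growth model with a known growth rate plus noise, so the result can be checked. The model, the noise level and the grid search are all illustrative assumptions. The grid search is a deliberately simple stand-in for the optimizers used in practice.

```python
import numpy as np

def simulate_logistic(x0: float, r: float, K: float, steps: int) -> np.ndarray:
    """Toy model (hypothetical helper): x[t+1] = x[t] + r * x[t] * (1 - x[t] / K)."""
    x = np.empty(steps + 1)
    x[0] = x0
    for t in range(steps):
        x[t + 1] = x[t] + r * x[t] * (1 - x[t] / K)
    return x

# Synthetic "observed" data from a hidden growth rate, plus measurement noise
rng = np.random.default_rng(3)
hidden_r = 0.3
observed = simulate_logistic(1.0, hidden_r, 100.0, 40) + rng.normal(scale=0.5, size=41)

# Calibrate: pick the r on a grid that minimizes squared error against the data
candidates = np.linspace(0.05, 0.95, 91)
sse = [np.sum((simulate_logistic(1.0, r, 100.0, 40) - observed) ** 2) for r in candidates]
best_r = candidates[int(np.argmin(sse))]
print(f"calibrated r = {best_r:.2f} (hidden value {hidden_r})")
```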

Practical Intersections

Data-Driven Simulations:

Autoencoders can be used within simulations to create efficient representations of high-dimensional data, making simulations faster and more manageable.

For example, in climate modeling, autoencoders could compress complex climate data, allowing simulations to run more efficiently.

Generating Synthetic Data:

Autoencoders can simulate realistic data points by decoding from latent space, useful in scenarios where real data is scarce or expensive to obtain.

This is akin to generating synthetic populations in social science simulations to study demographic trends.

Enhancing Model Accuracy:

Autoencoders can help refine simulation models by learning latent features that improve the model’s accuracy in representing complex systems.

In engineering, autoencoders could enhance finite element models by learning from real-world data and improving simulations of stress and strain in materials.
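Synthetic data generation can be sketched in four steps: fit an autoencoder, model the distribution of its latent codes, sample new codes, and decode them. The sketch below makes several illustrative assumptions. A linear autoencoder fitted via the SVD stands in for a trained network, and the data are synthetic. The latent distribution is modeled as an independent Gaussian per dimension, which is reasonable here because SVD-based codes are uncorrelated on the training set. Plain autoencoders do not guarantee that arbitrary latent points decode to realistic data, whereas variational autoencoders are designed for that. This example therefore illustrates only the decode-from-latent-space step.

```python
import numpy as np

rng = np.random.default_rng(4)
# Synthetic "real" data: 1,000 points in 4-D driven by 2 hidden factors, plus small noise
factors = rng.normal(size=(1000, 2))
mixing = np.array([[1.0, 0.5, 0.0, 2.0],
                   [0.0, 1.0, -1.0, 0.5]])
X = factors @ mixing + 0.05 * rng.normal(size=(1000, 4))

# Fit a 2-D linear autoencoder via the SVD and encode the data
mean = X.mean(axis=0)
_, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
W = Vt[:2].T
codes = (X - mean) @ W

# Assumed latent model: one Gaussian per dimension; sample new codes and decode them
code_mean, code_std = codes.mean(axis=0), codes.std(axis=0)
new_codes = rng.normal(code_mean, code_std, size=(500, 2))
synthetic = new_codes @ W.T + mean

print(np.round(np.cov(X, rowvar=False), 2))
print(np.round(np.cov(synthetic, rowvar=False), 2))
```

If the synthetic samples are faithful, the two printed covariance matrices should be close. The small noise in the two discarded directions is not reproduced.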

Conclusion

Autoencoders and simulations both aim to understand and manipulate complex data or systems through efficient representation and transformation. While autoencoders focus on learning compressed representations and reconstructing data, simulations model and predict the behavior of systems under various conditions. Their relationship lies in the shared principles of encoding, transformation, and reconstruction, making them complementary tools in data science and modeling. By integrating autoencoders into simulation workflows, we can achieve more efficient and accurate representations, enhancing our ability to analyze and predict complex phenomena.

V7 YouTube-Purgatorio, \(\sigma\)

Viriletype: The variance and heterogeneity amongst the decoders out there

i Fly-Paradiso, \(\%\)

Stereotype: Genres Traditional/Hymn, Worship/Soul, Churchy/Blues, Early/R&B, Late/Jazz with their progressions

Notype: Fusion in the spirit of Whitney Houston: “I’m every woman, it’s all in me.” Digestivo