Probability, ∂Y 🎶 ✨ 👑


- The first portrait of Gen. Milley, from his time as the U.S. military's top officer, was removed from the Pentagon last week on Inauguration Day less than two hours after President Trump was sworn into office.

- The now-retired Gen. Milley and other former senior Trump aides had been assigned personal security details ever since Iran vowed revenge for the killing of Qasem Soleimani in a 2020 drone strike ordered by Trump during his first term.

- On "Fox News Sunday," the chairman of the Senate Intelligence Committee, Tom Cotton, said he hoped President Trump would "revisit" the decision to pull the protective security details from John Bolton, Mike Pompeo, and Brian Hook who previously served under Trump.

- A senior administration official who requested anonymity replied, "There is a new era of accountability in the Defense Department under President Trump's leadership—and that's exactly what the American people expect."

- Gen. Milley served as chairman of the Joint Chiefs of Staff from 2019 to 2023 under both Presidents Trump and Biden.

-- Fox News

The integration of Large Language Models (LLMs) into medicine presents both profound opportunities and formidable challenges. Mark Dredze’s extensive work in machine learning, natural language processing (NLP), and health informatics lays the foundation for understanding how LLMs can revolutionize healthcare while exposing critical vulnerabilities in their deployment. The future of LLMs in medicine hinges on our ability to navigate these complexities, ensuring that advances in computational epidemiology, clinical decision support, and patient engagement translate into real-world benefits rather than exacerbating existing disparities.

🪙 🎲 🎰 🗡️ 🪖 🛡️

One of the most promising applications of LLMs in medicine is their potential to enhance diagnostic accuracy and decision-making. The comparative study by Ayers et al. (2023) evaluated physician and AI chatbot responses to patient questions posted on a public social media forum. Evaluators rated the chatbot responses higher than the physicians' responses in both quality and empathy. This finding suggests that LLMs could help reduce physician workloads, streamline consultations, and provide immediate preliminary assessments. However, deploying such models in clinical settings requires rigorous evaluation of their reliability, especially given the risk of generating plausible but incorrect responses.
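Evaluations like this often reduce to a proportion: out of N paired ratings, how often did evaluators prefer the chatbot answer? The sketch below reports such a proportion with a 95% Wilson score interval, so the uncertainty travels with the point estimate. It is a minimal illustration only. The counts are hypothetical and are not taken from Ayers et al. (2023), and `wilson_interval` is an illustrative helper name rather than part of any library.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives ~95%).

    `wilson_interval` is a hypothetical helper written for this sketch.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")
    p_hat = successes / trials
    z2 = z * z
    denom = 1 + z2 / trials
    # Center is pulled toward 0.5; the half-width accounts for the sample size
    center = (p_hat + z2 / (2 * trials)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / trials + z2 / (4 * trials * trials))
    return center - half_width, center + half_width


# Hypothetical counts: evaluators preferred the chatbot answer in 150 of 200 paired ratings
preferred, total = 150, 200
low, high = wilson_interval(preferred, total)
print(f"preference rate = {preferred / total:.3f}, 95% CI = ({low:.3f}, {high:.3f})")
```

The Wilson interval is used here instead of the simpler normal (Wald) interval because it stays within [0, 1]. It also behaves better when the proportion is near 0 or 1 or the sample is small.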

Fig. 1 Digital Library. Our color-coded QR code library with a franchise model for the digital twin will be launched in a month. The books will explore struggle, exchange, and consolidation as dynamic equilibria that emerge from the oscillation and rhythm of existence.

😓

A core challenge in medical LLMs is their susceptibility to misinformation and bias. Dredze’s research on weaponized health communication (Broniatowski et al., 2018) underscores how automated systems can be exploited to spread misinformation—particularly in controversial domains such as vaccination. If LLMs are trained on datasets polluted with misleading health claims, they risk perpetuating rather than mitigating misinformation. Addressing this requires robust data curation strategies and real-time model validation frameworks that can detect and correct deviations from evidence-based medical knowledge.

🌊 🏄🏾

Moreover, the interpretability of LLMs in medicine remains an ongoing concern. Many traditional clinical decision-support tools operate on transparent rule-based logic. LLMs, by contrast, function as black boxes, making it difficult to audit their reasoning. Crammer, Kulesza, and Dredze (2009) explored adaptive regularization of weight vectors (AROW), which maintains an explicit confidence estimate for each model weight. This offers one potential pathway to greater model accountability, but further research is needed to ensure that AI-driven medical recommendations are both interpretable and trustworthy.
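Adaptive regularization of weight vectors (AROW) keeps a variance alongside each weight. That variance records how confident the model is in the weight, and it shrinks as evidence accumulates. This per-weight confidence is what connects the approach to accountability. The sketch below is a minimal illustration under several assumptions: a diagonal covariance, binary labels in {-1, +1}, and a hand-picked regularization parameter `r`. It follows the AROW update as it is usually stated, not the authors' code, and `arow_train` and `predict` are hypothetical names.

```python
import numpy as np


def arow_train(X: np.ndarray, y: np.ndarray, r: float = 1.0, epochs: int = 1):
    """Diagonal-covariance AROW sketch for binary labels y in {-1, +1}.

    `arow_train` is a hypothetical name. Returns the mean weight vector `mu`
    and the per-feature variances `sigma` (smaller = more confident).
    """
    n_features = X.shape[1]
    mu = np.zeros(n_features)    # mean of the Gaussian over weights
    sigma = np.ones(n_features)  # diagonal of its covariance matrix
    for _ in range(epochs):
        for x_t, y_t in zip(X, y):
            margin = mu @ x_t                        # signed prediction score
            confidence = np.sum(sigma * x_t * x_t)   # x^T Sigma x
            if margin * y_t < 1:                     # hinge loss > 0: update
                beta = 1.0 / (confidence + r)
                alpha = max(0.0, 1.0 - y_t * margin) * beta
                mu += alpha * y_t * sigma * x_t      # mean moves along Sigma x
                sigma -= beta * (sigma * x_t) ** 2   # variance shrinks on active features
    return mu, sigma


def predict(mu: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Hypothetical helper: sign of the linear score, with ties mapped to +1."""
    return np.where(X @ mu >= 0, 1, -1)


# Toy synthetic data: the label is the sign of the first feature only
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = np.where(X[:, 0] >= 0, 1, -1)
mu, sigma = arow_train(X, y, r=1.0, epochs=3)
accuracy = np.mean(predict(mu, X) == y)
print("weights:", np.round(mu, 3))
print("per-feature variance:", np.round(sigma, 3))
print("training accuracy:", accuracy)
```

Each variance only shrinks on features that appear in updates. Inspecting `sigma` after training therefore shows which weights the model has seen enough evidence to trust. The diagonal approximation keeps memory linear in the number of features, at the cost of ignoring correlations between weights.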

🤺 💵 🛌

The use of LLMs for mental health applications highlights both the power and pitfalls of AI-driven medicine. Dredze’s collaborations in analyzing mental health signals on Twitter (Coppersmith et al., 2014) and tracking shifts in suicidal ideation (De Choudhury et al., 2016) demonstrate that social media-derived data can offer valuable public health insights. However, the transition from population-level surveillance to individual patient interventions introduces ethical concerns. The automation of mental health assessments could provide early-warning systems for high-risk individuals, but it also raises questions about privacy, consent, and the potential for overreach in algorithmic monitoring.

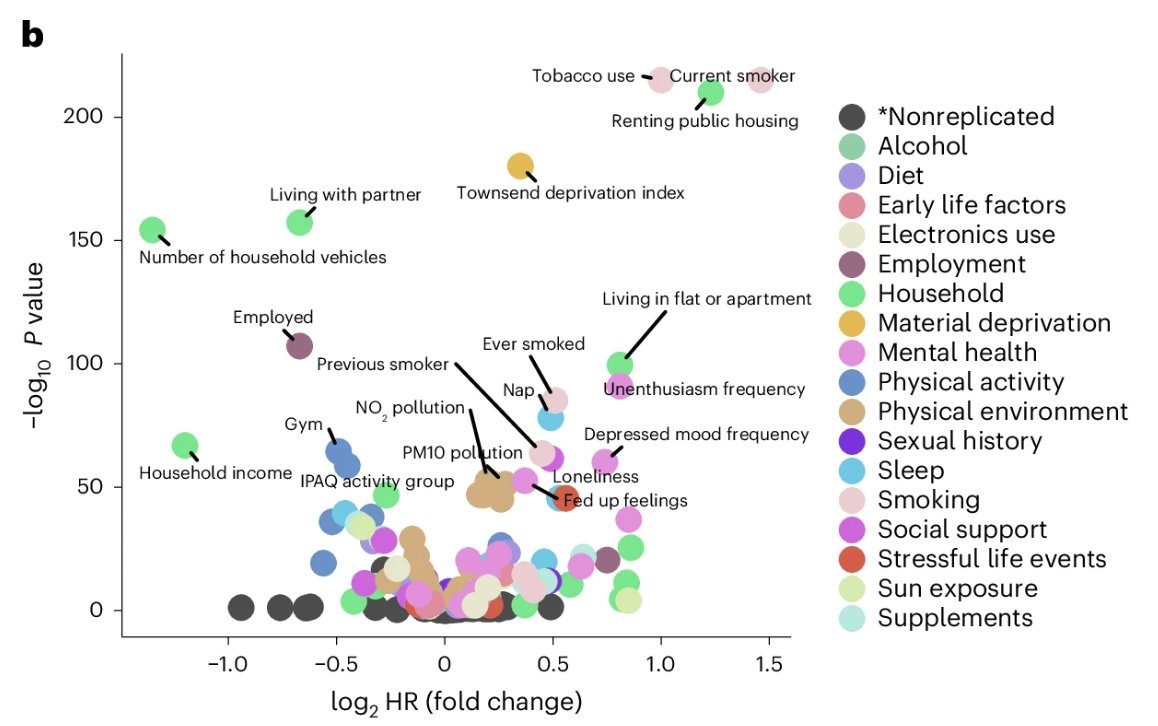

Fig. 2 Lifestyle is a stronger predictor of aging and mortality than genetics. A new Nature study of ~500,000 people found: 🎯 17% of mortality variation was linked to lifestyle (exposome 🚬 🥃). 🧬 <2% was explained by genetics. Here’s what you need to know ☝🏾 🧵 1/10

🏇 🧘🏾‍♀️ 🔱 🎶 🛌

Another domain in which LLMs can have an immediate impact is epidemiology. Traditional surveillance systems report with a delay, a gap that Twitter-based influenza tracking, such as the study by Broniatowski, Paul, and Dredze (2013), aimed to narrow. By integrating LLMs into public health surveillance, researchers could develop real-time systems that synthesize diverse data sources to predict disease spread at finer spatial and temporal granularity. These sources include electronic health records, patient-reported symptoms, and social media trends. However, integrating LLMs into epidemiological frameworks requires careful attention to data security, so that sensitive patient information is safeguarded against breaches or misuse.
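At its simplest, social-media-based surveillance of this kind turns a stream of posts into a weekly rate. It then checks how closely that rate tracks an official reference series, and whether it moves ahead of it. The sketch below makes two assumptions. First, posts have already been labeled as infection-related by some upstream classifier (an LLM or otherwise). Second, the weekly numbers are made up for illustration rather than drawn from any real surveillance data. `weekly_rate` and `lagged_correlation` are hypothetical helpers.

```python
import numpy as np


def weekly_rate(flagged_posts: np.ndarray, total_posts: np.ndarray) -> np.ndarray:
    """Hypothetical helper: fraction of each week's posts flagged as infection-related."""
    return flagged_posts / np.maximum(total_posts, 1)  # guard against empty weeks


def lagged_correlation(signal: np.ndarray, reference: np.ndarray, lag: int) -> float:
    """Hypothetical helper: Pearson correlation between signal[t] and reference[t + lag].

    A high value at a positive lag means the social-media signal moves ahead of
    the reference series by that many weeks.
    """
    if lag < 0:
        raise ValueError("lag must be non-negative")
    if lag:
        signal, reference = signal[:-lag], reference[lag:]
    return float(np.corrcoef(signal, reference)[0, 1])


# Made-up weekly numbers, for illustration only
flagged = np.array([12, 18, 30, 55, 80, 95, 70, 40, 22, 15])
total = np.array([1000, 1010, 990, 1005, 1000, 995, 1002, 998, 1001, 1000])
reference_ili = np.array([1.1, 1.2, 1.5, 2.0, 3.0, 4.2, 4.8, 3.6, 2.3, 1.6])  # hypothetical % of visits

rate = weekly_rate(flagged, total)
for lag in range(3):
    print(f"lag {lag} week(s): r = {lagged_correlation(rate, reference_ili, lag):.2f}")
```

The toy reference series was built to trail the social signal by one week, so the lag-1 correlation is the highest of the three. With real data, the lag, and whether any lead exists at all, is an empirical question.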

As we look toward the future, the role of LLMs in medicine must be guided by a synthesis of computational innovation and ethical oversight. Key challenges such as bias mitigation, interpretability, and misinformation control must be addressed in parallel with technological advancements. Dredze’s interdisciplinary approach—spanning health informatics, NLP, and machine learning—provides a model for how academia, industry, and policy can collaborate to shape the responsible deployment of AI in healthcare. If leveraged correctly, LLMs have the potential to redefine medical practice, transforming the way physicians diagnose, treat, and interact with patients in an increasingly digitized world.


import numpy as np
import matplotlib.pyplot as plt
import networkx as nx


# Define the layers of the diagram (drawn like a feed-forward neural network).
# The layer keys are French: Suis ("I am"), Voir ("to see"), Choisis ("choose"),
# Deviens ("become"), M'élève ("I raise myself"). They are stored as a node
# attribute only and are not drawn.
def define_layers():
    return {
        'Suis': ['Foundational, 5%', 'Grammar', 'Nourish It', 'Know It', 'Move It', 'Injure It'],  # Static
        'Voir': ['Gate-Nutrition, 15%'],
        'Choisis': ['Prioritize-Lifestyle, 50%', 'Basal Metabolic Rate'],
        'Deviens': ['Unstructured-Intense', 'Weekly-Calendar', 'Refine-Training, 25%'],
        "M'élève": ['NexToken Prediction', 'Hydration', 'Fat-Muscle Ratio', 'Visceral-Fat, 5%', 'Existential Cadence'],
    }


# Assign a fill color to each node; nodes not listed here are drawn light gray
def assign_colors():
    color_map = {
        'yellow': ['Gate-Nutrition, 15%'],
        'paleturquoise': ['Injure It', 'Basal Metabolic Rate', 'Refine-Training, 25%', 'Existential Cadence'],
        'lightgreen': ['Move It', 'Weekly-Calendar', 'Hydration', 'Visceral-Fat, 5%', 'Fat-Muscle Ratio'],
        'lightsalmon': ['Nourish It', 'Know It', 'Prioritize-Lifestyle, 50%', 'Unstructured-Intense', 'NexToken Prediction'],
    }
    # Invert {color: [nodes]} into {node: color}
    return {node: color for color, nodes in color_map.items() for node in nodes}


# Define edge weights (hardcoded for editing). The weights are text labels
# such as '20/80' that are printed on the edges, not numbers used in layout.
def define_edges():
    return {
        ('Foundational, 5%', 'Gate-Nutrition, 15%'): '1/99',
        ('Grammar', 'Gate-Nutrition, 15%'): '5/95',
        ('Nourish It', 'Gate-Nutrition, 15%'): '20/80',
        ('Know It', 'Gate-Nutrition, 15%'): '51/49',
        ('Move It', 'Gate-Nutrition, 15%'): '80/20',
        ('Injure It', 'Gate-Nutrition, 15%'): '95/5',
        ('Gate-Nutrition, 15%', 'Prioritize-Lifestyle, 50%'): '20/80',
        ('Gate-Nutrition, 15%', 'Basal Metabolic Rate'): '80/20',
        ('Prioritize-Lifestyle, 50%', 'Unstructured-Intense'): '49/51',
        ('Prioritize-Lifestyle, 50%', 'Weekly-Calendar'): '80/20',
        ('Prioritize-Lifestyle, 50%', 'Refine-Training, 25%'): '95/5',
        ('Basal Metabolic Rate', 'Unstructured-Intense'): '5/95',
        ('Basal Metabolic Rate', 'Weekly-Calendar'): '20/80',
        ('Basal Metabolic Rate', 'Refine-Training, 25%'): '51/49',
        ('Unstructured-Intense', 'NexToken Prediction'): '80/20',
        ('Unstructured-Intense', 'Hydration'): '85/15',
        ('Unstructured-Intense', 'Fat-Muscle Ratio'): '90/10',
        ('Unstructured-Intense', 'Visceral-Fat, 5%'): '95/5',
        ('Unstructured-Intense', 'Existential Cadence'): '99/1',
        ('Weekly-Calendar', 'NexToken Prediction'): '1/9',
        ('Weekly-Calendar', 'Hydration'): '1/8',
        ('Weekly-Calendar', 'Fat-Muscle Ratio'): '1/7',
        ('Weekly-Calendar', 'Visceral-Fat, 5%'): '1/6',
        ('Weekly-Calendar', 'Existential Cadence'): '1/5',
        ('Refine-Training, 25%', 'NexToken Prediction'): '1/99',
        ('Refine-Training, 25%', 'Hydration'): '5/95',
        ('Refine-Training, 25%', 'Fat-Muscle Ratio'): '10/90',
        ('Refine-Training, 25%', 'Visceral-Fat, 5%'): '15/85',
        ('Refine-Training, 25%', 'Existential Cadence'): '20/80',
    }


# Spread a layer's nodes evenly along the y-axis at a fixed x position
def calculate_positions(layer, x_offset):
    y_positions = np.linspace(-len(layer) / 2, len(layer) / 2, len(layer))
    return [(x_offset, y) for y in y_positions]


# Create and visualize the neural-network-style graph
def visualize_nn():
    layers = define_layers()
    colors = assign_colors()
    edges = define_edges()

    G = nx.DiGraph()
    pos = {}
    node_colors = []

    # Map each original node name to a numbered label, e.g. "1. Foundational, 5%"
    mapping = {}
    counter = 1
    for layer in layers.values():
        for node in layer:
            mapping[node] = f"{counter}. {node}"
            counter += 1

    # Add nodes under their numbered labels; layer i is placed at x = 2 * i.
    # node_colors is filled in insertion order, which matches the order of G.nodes.
    for i, (layer_name, nodes) in enumerate(layers.items()):
        positions = calculate_positions(nodes, x_offset=i * 2)
        for node, position in zip(nodes, positions):
            new_node = mapping[node]
            G.add_node(new_node, layer=layer_name)
            pos[new_node] = position
            node_colors.append(colors.get(node, 'lightgray'))

    # Add edges using the numbered labels, skipping any edge with an undefined endpoint
    for (source, target), weight in edges.items():
        if source in mapping and target in mapping:
            G.add_edge(mapping[source], mapping[target], weight=weight)

    # Draw the graph with curved edges; the weight labels are placed along
    # straight lines between nodes, so they may sit slightly off the arcs
    plt.figure(figsize=(12, 8))
    edge_labels = {(u, v): d["weight"] for u, v, d in G.edges(data=True)}
    nx.draw(
        G, pos, with_labels=True, node_color=node_colors, edge_color='gray',
        node_size=3000, font_size=9, connectionstyle="arc3,rad=0.2"
    )
    nx.draw_networkx_edge_labels(G, pos, edge_labels=edge_labels, font_size=8)
    plt.title("OPRAH™: Existential Rupert Cadence", fontsize=25)
    plt.show()


# Run the visualization
visualize_nn()

Fig. 3 Grammar is the Ecosystem. It is not merely a set of rules but the living architecture of expression, where phonetics sketches the raw material of sound (5%), syntax assembles structure and hierarchy (15%), melody breathes life and motion (50%), rhythm imposes order and inevitability (25%), and cadence seals the imprint of finality (5%). This is the calculus of language, the unseen scaffolding beneath poetry, music, and even the genome itself: an interplay of inherited structure and emergent dynamism. To those with long legs, those who see farther, this is no mere taxonomy of speech but the very logic of existence, the encoded grammar of fate itself. For what is language but a genome of thought, an exposome of influence, a pokémon of captured energies, evolving through dynamism and necessity, bending syntax into meaning as nature bends species into survival?