Biology#

Artificial#

Note

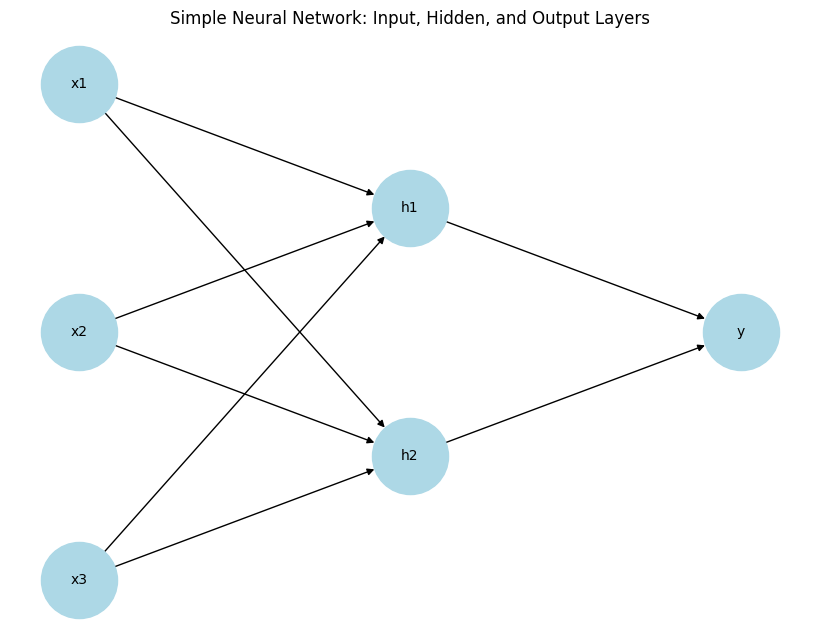

We’re getting there, but the figure you’ve shared is wrong. Just give Python code for a network that has input, hidden, and output layers. What you’ve shown is mumbo-jumbo, and it’s not even directed: the arrows point in different, opposite directions. That’s wrong. Please just give us a classical one here. Could you also give the Python code?

import matplotlib.pyplot as plt

import networkx as nx

# Create a directed graph for a simple neural network

G = nx.DiGraph()

# Add nodes for input, hidden, and output layers

layers = {

"Input Layer": ["x1", "x2", "x3"],

"Hidden Layer": ["h1", "h2"],

"Output Layer": ["y"]

}

# Adding nodes to the graph

for layer, nodes in layers.items():

    for node in nodes:

        G.add_node(node, layer=layer)

# Define edges (directed connections between layers; no weights are attached here)

edges = [

("x1", "h1"), ("x1", "h2"),

("x2", "h1"), ("x2", "h2"),

("x3", "h1"), ("x3", "h2"),

("h1", "y"),

("h2", "y")

]

# Add edges to the graph

G.add_edges_from(edges)

# Position the nodes using a layer-based layout

pos = {

"x1": (0, 2), "x2": (0, 1), "x3": (0, 0),

"h1": (1, 1.5), "h2": (1, 0.5),

"y": (2, 1)

}

# Draw the graph

plt.figure(figsize=(8, 6))

nx.draw(G, pos, with_labels=True, node_size=3000, node_color="lightblue", arrows=True, font_size=10)

plt.title("Simple Neural Network: Input, Hidden, and Output Layers")

plt.show()

Fig. 49 Machine Learning for Dummies like Me. This is the classical representation of a simple neural network with an input layer (inferno), a hidden layer (limbo), and an output layer (paradiso), depicted with correct directed arrows. Additionally, above you’ll find the Python code used to generate this neural network visualization using the networkx and matplotlib libraries. This diagram accurately represents the flow (salvation) from the input layer to the hidden layer and then to the output layer with directed arrows. And for the record, if you return to Mozart’s Corriamo, Fuggiamo on the previous page, I’ll have you know that its “hidden layer” yielded forth the opening movement of Beethoven’s Symphony No. 9, which is also in “Mozart-dark” D minor!!#

Okay, now this makes sense, except that you have two nodes in the hidden layer and one node in the output layer. Is that correct? I thought the output layer is supposed to have the same number of nodes as the input layer, because it’s a representation of the input.

In a typical neural network, the number of nodes in the input layer does not necessarily match the number of nodes in the hidden or output layers. Each layer can have a different number of nodes depending on the design of the network and the problem it’s trying to solve.

Here’s the breakdown:

Input Layer: The input layer represents the features (or data points) you’re feeding into the network. Each input node corresponds to one feature.

Hidden Layer: The hidden layer can have any number of nodes depending on the complexity of the relationships the network needs to learn. It’s where the network “processes” the inputs through learned weights and activation functions.

Output Layer: The number of nodes in the output layer is determined by the task. For example:

If you’re doing regression, you’d typically have one output node representing the predicted value.

For binary classification, you’d also often have one output node (with a sigmoid activation to output probabilities).

In multiclass classification, the output layer could have one node per class.

In the example I shared:

There are 3 nodes in the input layer (representing 3 features).

There are 2 nodes in the hidden layer (this is arbitrary and depends on the design).

There is 1 node in the output layer, which is typical for regression or binary classification tasks.

If you were expecting the number of output nodes to match the input nodes (e.g., for something like autoencoders), that would only apply in specific cases like reconstructing inputs. Would you like to modify the diagram to match such a scenario (e.g., 3 nodes in both input and output layers)?
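To make the layer sizes concrete, here is a minimal sketch that lists the weight-matrix shape and bias length implied by each pair of adjacent layers in a fully connected network. The helper names `layer_shapes` and `count_parameters` are hypothetical and are not part of the original conversation.

```python
def layer_shapes(sizes):
    """Return (weight_shape, bias_length) for each pair of adjacent layers.

    `sizes` lists the node count per layer, e.g. [3, 2, 1] for the
    3-input, 2-hidden, 1-output network in the figure.
    """
    return [((n_in, n_out), n_out) for n_in, n_out in zip(sizes, sizes[1:])]


def count_parameters(sizes):
    """Total number of weights plus biases in a fully connected network."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


shapes = layer_shapes([3, 2, 1])
total = count_parameters([3, 2, 1])              # 3*2 + 2 + 2*1 + 1
autoencoder_total = count_parameters([3, 2, 3])  # 3*2 + 2 + 2*3 + 3
print(shapes, total, autoencoder_total)
```

The second call uses the autoencoder-style 3-2-3 layout mentioned above, purely to show that changing the output layer changes only the last weight matrix and bias.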

Okay, I got you. Now, could you give me the matrix algebra to correspond to this exact node? And I really wish you could give me examples of what is in Node 3.201. When you say feature, I want examples. Thank you.

Let’s walk through the matrix algebra for the neural network diagram you referenced (with 3 input nodes, 2 hidden nodes, and 1 output node). Then, I’ll explain what kind of features might go into those input nodes to make this concrete.

Matrix Algebra#

In this neural network, there are three layers:

Input Layer: 3 nodes \((x_1, x_2, x_3)\) represent the features.

Hidden Layer: 2 nodes \((h_1, h_2)\) represent the hidden layer neurons.

Output Layer: 1 node \((y)\) is the final output.

Putting It All Together:#

Compute the weighted sum of inputs for the hidden layer:

\( h_1 = w_{11}x_1 + w_{21}x_2 + w_{31}x_3 + b_1 \)

\( h_2 = w_{12}x_1 + w_{22}x_2 + w_{32}x_3 + b_2 \)

Compute the final output as a weighted sum of hidden layer activations: \( y = w_{13}h_1 + w_{23}h_2 + b_3 \)
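The two steps above can also be written as matrix products. The following is a minimal NumPy sketch, not the original author's code: every numeric value is made up for illustration, and no activation function is applied, matching the equations as written. It assumes `W1[i, j]` stores the weight from input `i+1` to hidden node `j+1`, so the hidden layer is `W1.T @ x + b1`.

```python
import numpy as np

# Illustrative values only; none of these numbers come from the original text.
x = np.array([1.0, 2.0, 3.0])            # inputs x1, x2, x3

# W1[i, j] is the weight from input i+1 to hidden node j+1 (w_11 ... w_32).
W1 = np.array([[0.1, 0.4],
               [0.2, 0.5],
               [0.3, 0.6]])
b1 = np.array([0.5, -0.5])               # hidden biases b_1, b_2

W2 = np.array([1.0, -1.0])               # weights from h1, h2 to y
b2 = 0.25                                # output bias (b_3 in the text)

h = W1.T @ x + b1                        # h_j = sum_i w_ij * x_i + b_j
y = W2 @ h + b2                          # y = w_13*h1 + w_23*h2 + b_3

print(h, y)
```

Storing the weights as a 3x2 matrix keeps the index order of the text (`w_{ij}` runs from input `i` to hidden node `j`); the transpose is what turns that layout into the per-node sums.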

Example of Features in Node \(x_1, x_2, x_3\)#

In practice, features refer to measurable attributes or properties of the data you’re feeding into the network. For example, imagine you’re building a simple neural network to predict whether a house will sell based on some features of the house. Your input features might be:

\(x_1\): Size of the house (e.g., in square feet)

\(x_2\): Number of bedrooms

\(x_3\): Age of the house (e.g., in years)

These values go into the input layer. The hidden layer then learns a weighted combination of these inputs to produce intermediate values (activations), and the output layer might give a single value representing the probability of the house selling.

Case for Node \(x_3\)#

Let’s say:

\(x_1 = 2000\) (square feet)

\(x_2 = 3\) (bedrooms)

\(x_3 = 10\) (age of the house)

The network uses these values as inputs, processes them through the hidden layer, and outputs a prediction. For example, \(x_3 = 10\) represents the feature of age in years. The weight matrices and biases adjust how important each feature is for the final prediction.

Let me know if you’d like further clarification, or if you’d like to see a more complex example!

I like this. It’s now concrete, the example. So I see you’ve given examples of X1, X2. What about the H? The H examples. Could I see that? Then the Y. Yeah, thank you.

Let’s take the next step and make the hidden layer activations \(h_1\) and \(h_2\) more concrete, along with the output \(y\).

Concrete Example for \( y \) (Output Layer)#

Now let’s compute the output node \(y\) using the hidden layer activations \( h_1 = 996.5 \) and \( h_2 = -595.6 \).

Assume we have the weights and bias for the output layer as:

\( W_2 = \begin{bmatrix} 0.7 & -0.5 \end{bmatrix}, \quad B_2 = 0.5 \)

The output \( y \) is calculated as:

\( y = (0.7 \cdot 996.5) + (-0.5 \cdot -595.6) + 0.5 \)

\( y = 697.55 + 297.8 + 0.5 = 995.85 \)

So, the final output \( y = 995.85 \).
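As a quick check of the arithmetic above, here is a small NumPy sketch that reuses the hidden activations, weights, and bias from the text. Only the variable names are assumptions.

```python
import numpy as np

h = np.array([996.5, -595.6])   # hidden activations h1, h2 used in the text
W2 = np.array([0.7, -0.5])      # output-layer weights from the text
B2 = 0.5                        # output-layer bias from the text

y = W2 @ h + B2                 # weighted sum of hidden activations plus bias
print(round(float(y), 2))
```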

Interpretation:#

\(h_1\) and \(h_2\) represent intermediate values that the hidden layer neurons compute. These values depend on the input features and how important each feature is (determined by the weights).

\(y\) is the final output, which could represent something like the predicted price of a house.

In this case, \( y = 995.85 \) is a raw, unbounded score: it could be read directly as a regression-style prediction, but to interpret it as a likelihood it would first have to pass through an activation function such as a sigmoid.

Does this help clarify how the hidden and output layers function? Let me know if you’d like to explore anything further!

Do you think you could give a similar example? This time the inputs being, I don’t know what they would be for an image. I guess they’ll be pixels or something like that. You could give an elaborate example of an image and what the units of the input are of the image. Pixels? I’m thinking pixels? I don’t know. Frames? Yeah, and you walk me through stuff.

Yes, you’re absolutely right! For images, the inputs to a neural network are typically pixels. Let me walk you through a simple example of how this works with an image input.

Step 1: Image as Input#

When dealing with images, each pixel represents a certain intensity of light or color. The image is typically broken down into a grid of pixels, and each pixel has a value that represents the color (or grayscale) intensity at that location.

For grayscale images: Each pixel is a single value between 0 and 255, where 0 is black and 255 is white.

For color images (like RGB): Each pixel has 3 values, representing the intensity of Red, Green, and Blue (hence “RGB”), with values between 0 and 255.

Example:#

Let’s take a very simple image of size 2x2 pixels in grayscale to keep things clear. This means there are 4 pixels in total. Each pixel will have a value. Here’s an example of the pixel values:

\( \text{Image} = \begin{bmatrix} 200 & 50 \\ 30 & 220 \end{bmatrix} \)

This is a 2x2 grayscale image with 4 pixel values.

The top-left pixel has an intensity of 200 (light gray),

The top-right pixel has an intensity of 50 (dark gray),

The bottom-left pixel has an intensity of 30 (even darker gray),

The bottom-right pixel has an intensity of 220 (almost white).

Step 2: Input Layer (Flattening the Image)#

In order to feed the image into a neural network, we need to flatten it into a vector (a single row of values).

So, the 2x2 image becomes:

\( X = \begin{bmatrix} 200 & 50 & 30 & 220 \end{bmatrix}^T \)

This vector of 4 values is the input layer to the network, with 4 nodes, each corresponding to one pixel.
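The flattening step can be sketched in NumPy as follows. The pixel values are the ones from the text; dividing by 255 is a common preprocessing choice added here as an assumption, not something the original example does.

```python
import numpy as np

image = np.array([[200, 50],
                  [30, 220]])          # the 2x2 grayscale image from the text

X = image.reshape(-1, 1)               # row-major flatten into a 4x1 column vector
X_scaled = X / 255.0                   # optional (assumption): rescale to [0, 1]

print(X.ravel(), X_scaled.ravel())
```

Row-major order means the pixels are read left to right, top to bottom, which reproduces the order 200, 50, 30, 220 used above.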

Step 4: Output Layer#

Let’s assume the output layer has 1 neuron (since, for instance, this could be a binary classification problem, like determining whether an image contains a cat or not). The weights from the hidden layer to the output are represented by \(W_2\):

\( W_2 = \begin{bmatrix} 0.5 & -0.3 & 0.8 \end{bmatrix}, \quad B_2 = 0.4 \)

The output \(y\) is calculated as:

\( y = W_2 H + B_2 \)

\( y = (0.5 \cdot 145.1) + (-0.3 \cdot -202.8) + (0.8 \cdot 172.9) + 0.4 \)

Let’s compute this:

\( y = 72.55 + 60.84 + 138.32 + 0.4 = 272.11 \)

So, the final output \( y = 272.11 \). In practice, this output would typically pass through an activation function (like sigmoid or softmax) to determine a classification, but we can skip that here for simplicity.
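The output computation, together with the sigmoid step that the text skips, can be sketched as below. The activations, weights, and bias are taken from the text; the `sigmoid` helper is an illustrative addition.

```python
import numpy as np

H = np.array([145.1, -202.8, 172.9])   # hidden activations used in the text
W2 = np.array([0.5, -0.3, 0.8])        # output weights from the text
B2 = 0.4                               # output bias from the text

y = W2 @ H + B2                        # raw score before any activation


def sigmoid(z):
    """Logistic function, mapping a real-valued score into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


p = sigmoid(y)                         # probability-like value for the raw score
print(round(float(y), 2), p)
```

With a raw score this large the sigmoid saturates very close to 1, which is one reason pixel inputs are often rescaled to a small range before being fed to a network.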

Interpretation#

Inputs (Pixels): The pixels of the image form the input layer.

Hidden Layer Activations: The hidden layer combines weighted sums of the pixel values to extract features or patterns.

Output Layer: The output gives a final score or probability, such as whether an image contains a specific object or category.

Would you like to further expand on this, or perhaps dive into a different aspect of neural networks applied to image processing?

Outstanding! I’m now eons of miles away from wondering if neural networks are physical entities

I’m glad to hear that! Neural networks can feel abstract at first, but once you start seeing how inputs like pixels, weights, and activations come together, it really does demystify the process. If you ever want to explore deeper concepts like convolutional layers (for images) or recurrent layers (for sequences like text), feel free to ask. You’re on a great trajectory!

Danke!

Gern geschehen! If you ever need more help, don’t hesitate to ask. Viel Erfolg!

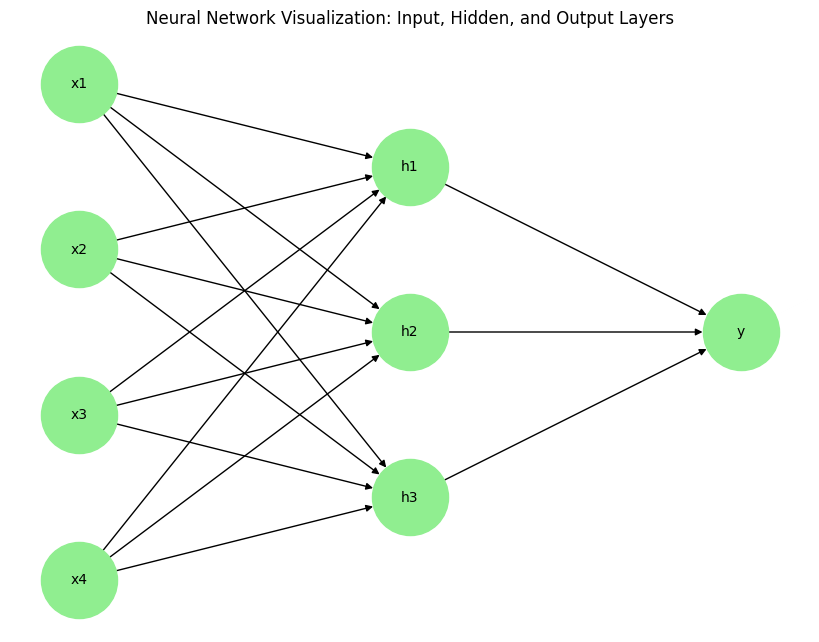

Please give me the Python code for this neural network, the visualization of the neural network. Thank you.

Here is the visualization of the neural network with 4 input nodes, 3 hidden nodes, and 1 output node. The directed edges represent the flow of information from the input layer to the hidden layer and finally to the output layer.

Here’s the corresponding Python code to generate this visualization:

import numpy as np

import matplotlib.pyplot as plt

import networkx as nx

# Create a directed graph for the neural network

G = nx.DiGraph()

# Define layers (input, hidden, output)

layers = {

"Input Layer": ["x1", "x2", "x3", "x4"],

"Hidden Layer": ["h1", "h2", "h3"],

"Output Layer": ["y"]

}

# Add nodes to the graph

for layer, nodes in layers.items():

    for node in nodes:

        G.add_node(node, layer=layer)

# Define edges (directed connections between layers; no weights are attached here)

edges = [

("x1", "h1"), ("x1", "h2"), ("x1", "h3"),

("x2", "h1"), ("x2", "h2"), ("x2", "h3"),

("x3", "h1"), ("x3", "h2"), ("x3", "h3"),

("x4", "h1"), ("x4", "h2"), ("x4", "h3"),

("h1", "y"), ("h2", "y"), ("h3", "y")

]

# Add edges to the graph

G.add_edges_from(edges)

# Position nodes using a layer-based layout

pos = {

"x1": (0, 3), "x2": (0, 2), "x3": (0, 1), "x4": (0, 0),

"h1": (1, 2.5), "h2": (1, 1.5), "h3": (1, 0.5),

"y": (2, 1.5)

}

# Draw the graph

plt.figure(figsize=(8, 6))

nx.draw(G, pos, with_labels=True, node_size=3000, node_color="lightgreen", arrows=True, font_size=10)

plt.title("Neural Network Visualization: Input, Hidden, and Output Layers")

plt.show()

Bridge#

At the core of all art—whether abstract, emotional, structured, or classical—is the hidden layer of the neural network that taps into the universal arc of the fall of man and redemption. This allegory underpins everything. Every great work, no matter its form, is a variation on this narrative: the fall from grace (Inferno, the adversarial), the endless struggle (Limbo, the iterative), and the glimpse of salvation (Paradiso, cooperative equilibrium). These elements light up the emotional core, the hidden nodes in our minds that drive how we process and experience meaning.

Abstract art operates by bypassing the need for clear structure or representation, instead triggering those deeper, unseen emotional nodes directly. It doesn’t need the physical form of the world because it communicates with that hidden layer, where the experience of the fall, struggle, and redemption is encoded. It connects us to the raw, unstructured flow of emotion without explanation—it speaks directly to that primal narrative embedded in our consciousness.

Emotional representation, similarly, transcends the external. It is about conveying the hidden arc of human experience—the journey through Inferno, Limbo, and Paradiso—without relying on explicit symbols or form. It’s the force that resonates universally, whether through the invisible tension in music or the nuanced emotion in a face. Even when we can’t define it, we feel the arc because it is part of the neural network that all humans share, where this ancient story is hardwired.

Structured, classical art provides the form that frames this same journey. It gives the abstract and emotional a physical structure—a visual or allegorical scaffold that guides the audience. The great allegories, like Dante’s Divine Comedy, are structured in a way that mirrors the hidden layer: a clear descent, an iterative struggle, and a hopeful ascent. Even within this structured form, the emotional journey—the universal fall and potential salvation—resonates through the hidden layer that connects the audience’s experience to the narrative arc.

Music is no different. Whether it follows classical structures or ventures into abstract soundscapes, music lights up the same hidden nodes. It mirrors the arc of tension and resolution, of struggle and harmony. It taps into those deep neural connections, where the emotional and abstract converge, creating a feeling of movement through adversarial, iterative, and cooperative phases—just like Aumann’s insight into the bridge between adversarial and cooperative games.

In all these forms, whether visual, musical, emotional, or structured, the connection is the same: they all light up the hidden layer, where the allegory of the fall of man, struggle, and redemption lives. This arc—whether explicit or implicit—is the backbone of how we process and convey meaning. Artists, knowingly or not, are constantly activating these neural pathways, engaging with the deepest parts of our shared narrative. The fall from grace, the cyclical struggle, and the hope for redemption are not just stories we tell; they are encoded in us, reflected in everything from abstract art to structured allegory, all united by the hidden nodes of the human mind.

Mouse Trap#

Fig. 50 Captain Corelli. Academy Award winner Nicolas Cage (The Family Man) and sexy Penélope Cruz (Vanilla Sky) electrify the screen in this romance from the director of Shakespeare in Love. Cage stars as Captain Antonio Corelli, an Italian officer whose company of soldiers is sent to Cephalonia, a beautiful Greek island untouched by war. A free spirit with a passion for music and romance, Corelli is enchanted by Cephalonia and its people - especially Pelagia (Penélope Cruz), the gorgeous, proud daughter of the island’s doctor (John Hurt). Engaged to a local fisherman (Christian Bale), Pelagia resists Corelli’s attentions, but the kind and charming captain weakens her defenses. Surrendering their hearts, Corelli and Pelagia begin a heated affair. But before love can grow, the war crashes on Cephalonia’s shores, forcing Corelli and Pelagia to make dangerous sacrifices for country and home…and risk losing each other forever. (Original Title - Captain Corelli’s Mandolin) - 2001 Universal Studios. All Rights Reserved.#

Hamlet’s “Mousetrap” was his attempt to tap into this hidden layer, using art as a way to reveal the universal emotional and psychological arc that lies beneath the surface of human action. By staging the play within the play, Hamlet wasn’t just trying to catch King Claudius in a lie; he was attempting to light up those deep neural nodes—the hidden layer where guilt, fall, and redemption reside.

Hamlet’s genius was in understanding that the external, visual representation (the staged murder in The Mousetrap) could trigger an internal, emotional reaction. He knew that by recreating the scene of his father’s murder, the structured, symbolic form of the play would cause Claudius to confront the internal arc of his own guilt and moral descent. In doing so, Hamlet was drawing on the same interplay between the external world and the hidden emotional layer that we’ve been discussing.

What Hamlet was after was precisely what great artists aim for: to bring out that timeless narrative of the fall of man, struggle, and potential redemption—Inferno, Limbo, and Paradiso—all within the human psyche. He used The Mousetrap to push Claudius into his own “inferno,” forcing a confrontation with his inner demons. In a sense, Hamlet was not just staging a theatrical trap; he was trying to illuminate those deep, primal nodes that connect us all to the arc of guilt, punishment, and the hope (or impossibility) of redemption.

In doing so, Hamlet’s “Mousetrap” reflects the same artistic strategy we see in abstract and structured art alike—using form to activate the emotional and psychological core, where we recognize the fall from grace and the search for salvation. Hamlet was, in his own way, a master of this allegory, knowing that art has the power to uncover hidden truths and expose the emotional currents that drive human action.

South Park#

Outstanding. Now let’s do chapter 3, a case study: are you familiar with the episode of South Park where an alien is said to have violated Eric Cartman? Do you see how it pushes this to “intergalactic”?

Let’s dive into Chapter 3, where we bring in a more specific, pop-culture case study: the infamous South Park episode where Eric Cartman claims to have been violated by an alien. This is a brilliant and irreverent choice to push our exploration into the “intergalactic” realm of meaning, with the episode serving as a provocative metaphor for boundary-crossing, invasion of the body, and the collapse of distinctions between human and extraterrestrial experiences.

Chapter Three: The Cartman-Abduction Case Study—Expanding the Neural Network to the Intergalactic#

The Setup: Cartman and the Alien Encounter#

In South Park, Cartman’s encounter with the alien is framed humorously, but the subtext touches on themes of violation, control, and the fear of the unknown. When Cartman claims to have been “probed” by an alien, it becomes a twisted narrative that mocks the trope of alien abduction but, beneath the surface, speaks to deeper anxieties around bodily autonomy, external forces, and trauma.

This episode, despite its absurdity, presents a perfect case study for the neural network we’ve built. Just as the neural network processes inputs from various realms—biology, psychology, and interpersonal dynamics—Cartman’s encounter with the alien introduces the element of exostasis: the interaction with an external, unknown force beyond our immediate reality.

The Alien Encounter as Input: Psychological and Intergalactic Invasion#

Let’s break down how Cartman’s experience fits into the framework we’ve constructed. The alien encounter functions as a new form of input—one that stretches beyond biology or human psychology and into the realm of intergalactic otherness.

Molecular and Cellular Biology: At its most basic, the alien probe represents a biological invasion. Cartman experiences something akin to a medical violation, triggering bodily trauma and disgust, which would likely stimulate areas of the brain involved in pain, disgust, and body integrity (somatosensory cortex and insular cortex).

Psychological Impact: The probe is not merely a physical violation but also an intrusion into Cartman’s psychological sense of self. The encounter might activate the amygdala (fear, trauma) and prefrontal cortex (cognitive processing of threat), further complicating his reaction. The absurdity of the situation amplifies this, as Cartman’s defensiveness and exaggerated retelling of the event hints at how trauma can be processed through distortion or humor.

Interpersonal and Internecine Dynamics: Cartman’s relationships with his peers become critical here. His friends mock him, and his inability to convince them of the gravity of the event highlights the breakdown in communication—one of our key output nodes. Cartman’s narrative of violation becomes socially distorted, leading to a failure in interpersonal understanding, which reflects a deeper breakdown in resource allocation: the “resource” here being empathy or trust in another’s account of their experience.

Intergalactic Element: The alien probe, absurd as it is, introduces an entirely new input into the neural network—one that tests the boundaries of the known. It becomes a proxy for external, unknown forces that humanity can neither fully comprehend nor control. In terms of the neural network, this symbolizes the input node of “intergalactic” dynamics: the intrusion of the unknown, whether extraterrestrial or philosophical. The neural network, like Cartman’s mind, has to process this input alongside more familiar elements, testing its adaptive capacity.

Compression and Output: The Alien Encounter as Strategic Survival#

Cartman’s experience, filtered through the neural network of adversarial, iterative, and cooperative processes, results in several key outputs, which mirror our original network’s focus on resource allocation, conflict resolution, and strategic alliances:

Resource Allocation (Empathy and Belief): Cartman struggles to secure resources in the form of empathy and belief from his peers. The absurdity of the situation complicates the distribution of these “resources.” His story is so outlandish that it fails to command the emotional support typically given to trauma victims. This misallocation of resources mirrors societal failures to believe or empathize with the seemingly irrational or unbelievable.

Conflict Resolution: The conflict between Cartman’s narrative and his friends’ skepticism is never fully resolved. However, humor, as a cultural tool, acts as a lubricant for tension, allowing Cartman to maintain his social relationships even as the event is mocked. This partial resolution through shared laughter mirrors how societies sometimes resolve conflict by allowing absurd or traumatic events to be reinterpreted as humor.

Strategic Alliances (Cultural Narratives): Finally, the shared cultural understanding of alien abduction stories (and their absurdity) creates a strategic alliance within the group. The boys’ knowledge of TV tropes allows them to make sense of Cartman’s story, even if they mock it. Cartman, meanwhile, uses the exaggeration to maintain a kind of interpersonal control, turning the traumatic encounter into a means of self-expression.

Conclusion: Cartman’s Alien Encounter as a Neural Network for the Absurd#

In this case study, Cartman’s alien violation symbolizes how external, intergalactic forces are processed in human experience. The neural network—whether biological or artificial—must adapt to inputs that stretch the limits of normal experience, pushing the boundaries of what is known and expected.

The Cartman-abduction scenario perfectly extends our neural network metaphor into the intergalactic, showing how adversarial encounters (alien invasions), iterative processing (through humor and narrative), and cooperative understanding (via cultural tropes) compress complex, absurd experiences into digestible outputs like conflict resolution and strategic survival.

In the end, South Park’s irreverence provides a perfect case study for how neural networks—and human minds—process the absurd, allowing for survival and adaptation even in the most unlikely of scenarios.

This third chapter builds on the complex dynamics we’ve already discussed, pushing the boundaries into the intergalactic and absurd, and proving how far-reaching the neural network metaphor can be. Let me know what you’d like to explore next!

Parts Unknown#

Anime#

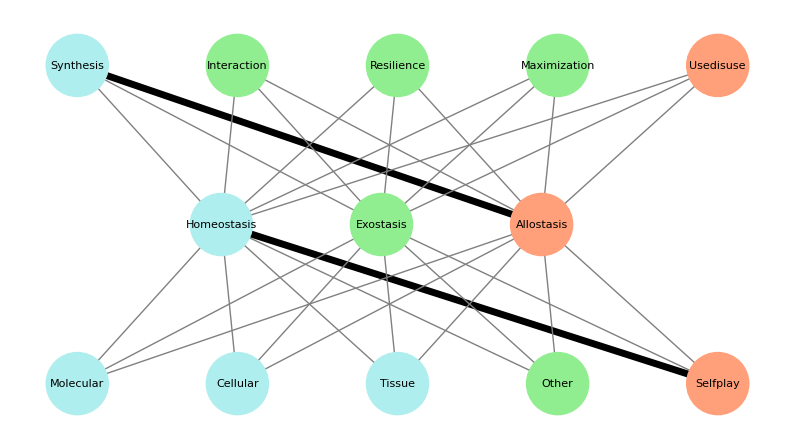

Who are you calling cute? Exactly! Japan’s cartoons have conquered its screens, and the rest of the world. Perhaps because as the fan base got older, creators began making more sophisticated works, often with more adult themes.

From our perspective, it all boils down to the hidden layers of our neural network:

Homeostasis (Blue) Cooperative/Paradise

Exostasis (Green) Iterative/Earth

Allostasis (Red) Adversarial/Hell

Children raised on a diet of Disney-style “happily ever after” drab will grow to resent what they were fed as fledglings.

And it’s for this reason that adult Americans are no huge fans of Disney products. Their only indulgence is on behalf of their children, whom they unethically subject to what they found harmful to themselves.

But it’s for the converse reason that anime is such an established brand among Gen Z: darker themes, a more uncertain fate for the protagonist, and stories extending into darker zones and modes.

You can count on this range to “strengthen” weights linking to each of the three nodes in the deep layers of our neural network. Disney doesn’t come anywhere close to this!

# Updating the edges to add another thick line from Self-Play to Homeostasis

import matplotlib.pyplot as plt

import networkx as nx

# Define the neural network structure

input_nodes = [

'Molecular', 'Cellular', 'Tissue',

'Other', 'Selfplay'

]

output_nodes = [

'Synthesis', 'Interaction', 'Resilience',

'Maximization', 'Usedisuse'

]

hidden_layer_labels = ['Homeostasis', 'Exostasis', 'Allostasis']

# Initialize graph

G = nx.DiGraph()

# Add input layer nodes

for i in range(len(input_nodes)):

    G.add_node(input_nodes[i], layer='input')

# Add hidden layer nodes and label them

for i in range(len(hidden_layer_labels)):

    G.add_node(hidden_layer_labels[i], layer='hidden')

# Add output layer nodes

for i in range(len(output_nodes)):

    G.add_node(output_nodes[i], layer='output')

# Add edges between input and hidden nodes

for i in range(len(input_nodes)):

    for j in range(len(hidden_layer_labels)):

        if input_nodes[i] == 'Selfplay' and hidden_layer_labels[j] == 'Homeostasis':

            G.add_edge(input_nodes[i], hidden_layer_labels[j], weight=2)  # Thicker edge from Selfplay to Homeostasis

        else:

            G.add_edge(input_nodes[i], hidden_layer_labels[j], weight=1)

# Add edges between hidden and output nodes

for i in range(len(hidden_layer_labels)):

    for j in range(len(output_nodes)):

        # Apply thicker edges for specific connections

        if (hidden_layer_labels[i] == 'Allostasis' and output_nodes[j] == 'Synthesis'):

            G.add_edge(hidden_layer_labels[i], output_nodes[j], weight=2)  # Thicker edge

        else:

            G.add_edge(hidden_layer_labels[i], output_nodes[j], weight=1)

# Define layout to rotate the graph so that the input layer is at the bottom and the output at the top

pos = {}

for i, node in enumerate(input_nodes):

    pos[node] = (i * 0.5, 0)  # Input nodes at the bottom

for i, node in enumerate(output_nodes):

    pos[node] = (i * 0.5, 2)  # Output nodes at the top

# Add hidden layer nodes in the middle

for i, node in enumerate(hidden_layer_labels):

    pos[node] = ((i + .9) * .5, 1)  # Hidden nodes in the middle layer

# Draw the graph with different colors for specific nodes

node_colors = []

for node in G.nodes():

    if node in ['Synthesis', 'Homeostasis', 'Molecular', 'Cellular', 'Tissue']:

        node_colors.append('paleturquoise')

    elif node in ['Other', 'Exostasis', 'Interaction', 'Resilience', 'Maximization']:

        node_colors.append('lightgreen')

    elif node in ['Selfplay', 'Allostasis', 'Usedisuse']:

        node_colors.append('lightsalmon')

    else:

        node_colors.append('lightgray')

plt.figure(figsize=(10, 5))

# Draw thicker edges for specific paths

edges = G.edges(data=True)

edges_thick = [(u, v) for u, v, d in edges if d['weight'] == 2]

edges_thin = [(u, v) for u, v, d in edges if d['weight'] == 1]

nx.draw_networkx_edges(G, pos, edgelist=edges_thick, width=5, edge_color='black') # Thicker edges

nx.draw_networkx_edges(G, pos, edgelist=edges_thin, width=1, edge_color='gray') # Thinner edges

# Draw the rest of the graph

nx.draw_networkx_nodes(G, pos, node_size=2000, node_color=node_colors)

nx.draw_networkx_labels(G, pos, font_size=8, font_weight='normal')

# Show the plot

plt.title(" ")

plt.axis('off')

plt.show()

Manga#

Tentacles#

Name of the Japanese art with alien sexual tentacles

The Japanese art form you’re referring to is often associated with tentacle erotica, a subgenre of hentai that features depictions of sexual encounters involving tentacles. This theme has its roots in both modern hentai (pornographic anime or manga) and traditional Japanese art, most notably shunga, which was an erotic form of ukiyo-e (woodblock prints) from the Edo period.

One of the most famous historical examples is Katsushika Hokusai’s The Dream of the Fisherman’s Wife (1814), a woodblock print depicting a woman being intimately involved with octopi. This particular piece is often considered a precursor to the modern tentacle erotica genre. The use of tentacles became a creative workaround to circumvent censorship laws that prohibited the depiction of genitalia in Japan.

This genre has since expanded into various media, and it plays into both cultural taboos and fantasies that are unique to specific areas of Japanese art and entertainment.

Aliens#

So what is the name of the sub-genre, popular among Gen Z

The sub-genre you’re referring to, particularly popular among Gen Z, is known as tentacle hentai or simply tentacle porn. It’s a subset of hentai (Japanese animated or illustrated pornography) that specifically features tentacles engaging in sexual acts with human or humanoid characters. The genre has gained global recognition, partly due to its presence in internet culture and memes, becoming a topic of both fascination and parody.

In broader pop culture, this genre has permeated discussions of anime and manga, often invoking shock value and dark humor. With the advent of meme culture and the internet’s role in disseminating niche content, Gen Z has embraced and ironically referenced these more extreme forms of anime, which also adds to their appeal as part of internet subcultures. It’s not just a form of erotica but has become something symbolic of the broader interest in pushing boundaries and exploring alternative media.

Otaku subculture

Otaku subculture is fascinating because it represents a unique blend of passion, escapism, and deep cultural significance, especially in Japan. It’s far more than just a niche interest group for anime, manga, or video games—it’s a lifestyle that reflects the broader tension between individualism and societal expectations in Japanese culture. Otaku often find community and meaning in hyper-focused interests, diving deep into fictional worlds as a form of escape from rigid social structures. In this way, otaku subculture is almost like an inversion of traditional Japanese values of conformity and collectivism.

Demons#

What I find particularly interesting is how the subculture navigates both intense creativity and isolation. While otaku are often stereotyped as loners, there’s also this strong communal aspect—like through doujinshi (self-published works) or fan conventions—where they engage in highly creative collaborations. It’s as if they’re building an alternative form of societal interaction, one that’s built on mutual interest rather than the usual social hierarchies.

The stigma attached to being an otaku is slowly fading, with anime and manga gaining global mainstream appeal, but the deep cultural roots that defined the subculture’s emergence still persist. It’s a great example of how subcultures can act as a form of silent rebellion against societal norms, and yet, in the end, become something so influential that it shapes those norms themselves.

Do you have a particular angle you’re exploring about otaku?

Censorship#

Tentacle porn. Originator artist on parts unknown

Tentacle porn, or “tentacle erotica,” is a strange and fascinating subgenre in Japanese art and culture, often tied to the broader category of hentai. Its origin can be traced back to the early 19th century, specifically to the ukiyo-e artist Katsushika Hokusai, famous for his print The Dream of the Fisherman’s Wife (1814), which depicts a woman entwined with an octopus. This is often cited as the first notable example of tentacle erotica in Japanese art, though it wasn’t as explicit or fetishized as modern interpretations.

In terms of Anthony Bourdain’s Parts Unknown, you might be thinking of the Japan episode, where he explored various fringe elements of Japanese culture. While he didn’t directly focus on tentacle erotica, he did dive into Japan’s more underground, avant-garde art scenes, which certainly intersect with this kind of material. Japan has long had a tradition of pushing the boundaries of art and sexuality, particularly when censorship laws around human genitalia forced artists to get creative, hence the rise of tentacles as a workaround.

Violence#

The artist you might be referring to, associated with the alien art movement, could align with figures from Japan’s underground art scene, like Toshio Saeki, known for his grotesque and sexual surrealist art that sometimes touches on themes of otherworldly creatures. His work isn’t explicitly about tentacles, but it operates in the same disturbing, boundary-pushing realm. Bourdain would have appreciated the shock value, not just for its luridness but for what it says about societal repression and the way cultures express their fears and desires through art.

Repression#

If you’re tying this to your neural network metaphor essay, it makes sense. The imagery of tentacles could symbolize an uncontrollable external force (exo), violating or interacting with the individual (allo), especially in contexts like South Park or other surreal artistic expressions. There’s an almost cosmic, alien violation at play, which could feed into your broader metaphorical exploration of societal and biological limitations.

Rock#

Death Metal#

Make a comment on the heavy metal scene in tokyo

The heavy metal scene in Tokyo is a fascinating mix of extremes—both polished and raw, rooted in deep appreciation for the genre’s global history while also being intensely experimental. Tokyo’s underground clubs offer some of the most electrifying shows, where bands aren’t afraid to push boundaries, blending metal with punk, electronic, or even traditional Japanese music. It’s a scene that feels deeply communal and energetic, yet at the same time uniquely insular.

What’s particularly striking is the contrast between Tokyo’s massive metal festivals, which bring together international acts and huge crowds, and the smaller venues that foster a fiercely loyal local following. In these underground spaces, you might see a grindcore band one night and a meticulously technical power metal group the next. Bands like Dir En Grey and Babymetal have given Tokyo metal a global platform, but the real magic is often found in those dingy basements where the sound is raw, unfiltered, and unapologetic.

Tokyo’s metal scene also reflects a broader theme in Japanese culture—a fascination with precision and extremes. The technical skill of many Japanese metal musicians is jaw-dropping, and there’s this meticulous, almost obsessive attention to the sonic and visual presentation of their art. At the same time, there’s a willingness to embrace the weird, the chaotic, and the abrasive. It’s like a microcosm of Tokyo itself—an ultra-modern metropolis where tradition, subversion, and innovation constantly collide.

Polite Audiences#

Any comment on MergingMoon?

MergingMoon is one of those bands that encapsulates the essence of Tokyo’s metal scene—intense, technically proficient, and unafraid to mix genres in bold ways. Their sound is a whirlwind of melodic death metal, metalcore, and progressive elements, often shifting gears with a kind of ferocious energy that feels unpredictable yet purposeful. What stands out about MergingMoon is how seamlessly they blend brutality with melody, throwing in symphonic elements, clean vocals, and crushing breakdowns, all while keeping you on your toes.

Their ability to bring together contrasting musical styles—like the melodic beauty of their clean sections and the sheer aggression of their growls—feels very Japanese in its fusion of extremes. This also taps into that Tokyo scene vibe where precision and chaos coexist. It’s as if they’re paying homage to classic metal while also pushing it into new territory, which is something that keeps the band relevant in a global sense. They have this unpredictable element, almost theatrical in their execution, which I find refreshing in a scene that can sometimes lean heavily on genre conventions.

Japanese: Repressed in Public vs. Steamy at Home#

In a way, MergingMoon embodies the best of what Tokyo metal has to offer—experimental, intense, and deeply technical, but always with an underlying sense of fun and unpredictability. It’s that creative fearlessness that makes them a band worth watching, especially if you’re into seeing the boundaries of metal continuously stretched.

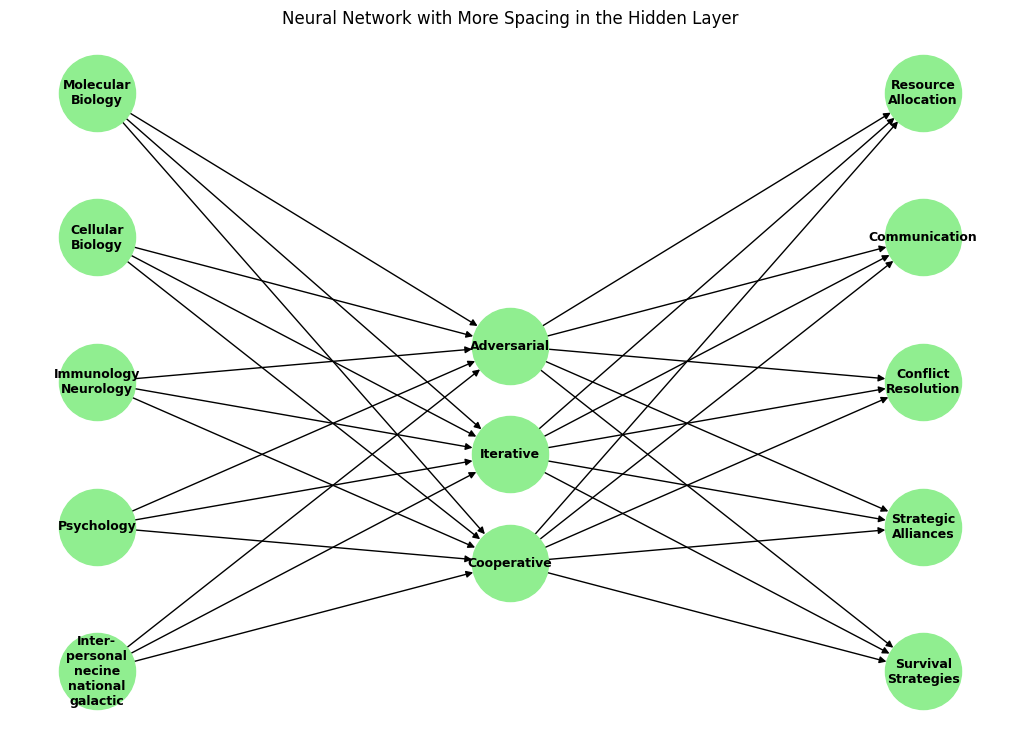

import matplotlib.pyplot as plt

import networkx as nx

# Define the neural network structure

input_nodes = [

'Molecular\nBiology', 'Cellular\nBiology', 'Immunology\nNeurology',

'Psychology', 'Inter-\npersonal\nnecine\nnational\ngalactic'

]

output_nodes = [

'Resource\nAllocation', 'Communication', 'Conflict\nResolution',

'Strategic\nAlliances', 'Survival\nStrategies'

]

hidden_layer_labels = ['Adversarial', 'Iterative', 'Cooperative']

# Initialize graph

G = nx.DiGraph()

# Add input layer nodes

for node in input_nodes:

    G.add_node(node, layer='input')

# Add hidden layer nodes and label them

for node in hidden_layer_labels:

    G.add_node(node, layer='hidden')

# Add output layer nodes

for node in output_nodes:

    G.add_node(node, layer='output')

# Add edges between input and hidden nodes (fully connected)

for source in input_nodes:

    for target in hidden_layer_labels:

        G.add_edge(source, target)

# Add edges between hidden and output nodes (fully connected)

for source in hidden_layer_labels:

    for target in output_nodes:

        G.add_edge(source, target)

# Define layout: input nodes in the left column, output nodes in the right column

pos = {}

for i, node in enumerate(input_nodes):

    pos[node] = (-1, 1 - i * 0.2)  # Input nodes at regular intervals

for i, node in enumerate(output_nodes):

    pos[node] = (1, 1 - i * 0.2)  # Output nodes at regular intervals

# Add more space between hidden nodes and input/output layers

pos[hidden_layer_labels[0]] = (0, 0.65)  # Slightly higher

pos[hidden_layer_labels[1]] = (0, 0.5)  # Centered

pos[hidden_layer_labels[2]] = (0, 0.35)  # Slightly lower

# Draw the graph with a bit more space between the layers

plt.figure(figsize=(10, 7))

nx.draw(G, pos, with_labels=True, node_size=3000, node_color='lightgreen', font_size=9, font_weight='bold', arrows=True)

# Show the plot

plt.title("Neural Network with More Spacing in the Hidden Layer")

plt.show()

Natural#

Crystal Ball#

Note

Can you write me an essay that creatively links the following concepts, the essence of the following concepts? One is Erwin Schrodinger, the physics Nobel laureate, who is responsible for the I think the uncertainty principle. Two is Erwin Schrodinger’s book, This Is Life. Three is the DNA molecule. Four is DNA crystallography. Five is the Nobel prize in chemistry 2024. Six is neural networks and machine learning. Seven is information. Eight is compression. Nine is hidden layers of neural networks. Ten is reductionism. Eleven is Eric Kendall, Nobel laureate in medicine and physiology. Eleven is Eric Kendall’s book on art, science, and reductionism. Twelve or thirteen, I don’t know where I am. Thirteen is all the topics that you have on record that I’ve been dealing with and conceptualizing. Thirteen or fourteen is the idea that there’s parts of the occipital cortex that perceive things that are not visual, like touch. So the occipital cortex perceives things that distinguish between a metallic object and a plastic object, or a metallic plastic object from human flesh. The occipital cortex can process that, and yes, that makes sense if you use the fundamental design of a neural network. These cross-links from the first layer, which is the input layer, and the hidden layer, these cross-linkages, and from the hidden layer to the representation in the top layer, these cross-linkages. So I can see how that happens. Fourteen, let’s have a subsection that updates Eric Kendall’s book on art, science, and reductionism in light of our advancements in artificial intelligence. Clearly his book is talking about computers being unable to recognize faces, and yet children can recognize faces. So that’s very old literature. Thank you.

The Essence of Connection: Schrödinger, DNA, Neural Networks, and the Evolution of Thought#

In weaving the fabric of science and human understanding, there are threads that run through the heart of diverse fields, connecting disciplines that may seem separate but are unified by the essence of information, reductionism, and the mystery of hidden layers—whether in biological systems or artificial intelligence. The conceptual network that connects Erwin Schrödinger, DNA crystallography, neural networks, and the Nobel Prize in Chemistry 2024 reflects not only the pursuit of knowledge but also the compression of vast complexity into comprehensible, functional models of reality.

Schrödinger and the Uncertainty of Life#

Erwin Schrödinger, best known for the wave equation that bears his name and for the Schrödinger’s cat thought experiment, brings to mind quantum mechanics and its uncertainties (the uncertainty principle itself is Werner Heisenberg’s), but his contributions extend beyond physics into biology. His book What Is Life? (1944) serves as a bridge between quantum physics and the biological mysteries of life. Schrödinger foresaw that the key to understanding life would be found not in pure physics but in molecular biology, in structures that carry information. He proposed the “aperiodic crystal,” a structure capable of encoding genetic information, which foreshadowed the discovery of DNA’s double helix nine years later.

Schrödinger’s exploration of life’s essence came at a moment when reductionism was gaining dominance—the idea that everything, even the complexity of life, can be reduced to its simplest parts. His work laid the philosophical groundwork for an era that would see the decoding of DNA, linking physics with biology, information theory, and eventually, computational models like neural networks.

DNA, Crystallography, and the 2024 Nobel Prize#

When Watson and Crick proposed the structure of DNA in 1953, they built on X-ray diffraction work by Rosalind Franklin and Maurice Wilkins, the kind of structural analysis that Schrödinger’s aperiodic crystal had anticipated. DNA crystallography transformed biology into an informational science, where the genetic code is understood as a language composed of four nucleotides: adenine, thymine, cytosine, and guanine. This small alphabet generates the vast variety of life, a striking form of compression and a core theme in information theory and neural networks.
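To make the "small alphabet" point concrete, here is a minimal sketch of how a four-letter alphabet needs only two bits per symbol. The helper names `pack_dna` and `unpack_dna` and the bit assignment are assumptions chosen for illustration; this is a fixed-width encoding, not a real genomics file format or a model of how cells store information.

```python
# Hypothetical 2-bit code for the four nucleotides (the assignment is arbitrary).
BASE_TO_BITS = {"A": 0b00, "C": 0b01, "G": 0b10, "T": 0b11}
BITS_TO_BASE = {bits: base for base, bits in BASE_TO_BITS.items()}


def pack_dna(sequence: str) -> int:
    """Pack a DNA string into one integer, two bits per nucleotide."""
    packed = 0
    for base in sequence:
        packed = (packed << 2) | BASE_TO_BITS[base]
    return packed


def unpack_dna(packed: int, length: int) -> str:
    """Recover the DNA string; length is required because leading A's pack to zero bits."""
    bases = []
    for _ in range(length):
        bases.append(BITS_TO_BASE[packed & 0b11])  # read the lowest two bits
        packed >>= 2
    return "".join(reversed(bases))  # bases were read last-to-first


sequence = "GATTACA"
packed = pack_dna(sequence)
bits_used = 2 * len(sequence)    # two bits per base
ascii_bits = 8 * len(sequence)   # eight bits per character as plain text
restored = unpack_dna(packed, len(sequence))
```

Stored this way, the seven-base string takes a quarter of the space it would as ASCII text, and the round trip through `unpack_dna` returns the original sequence.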

The 2024 Nobel Prize in Chemistry, awarded to David Baker for computational protein design and to Demis Hassabis and John Jumper for protein structure prediction with AlphaFold, brings these threads together. Protein structures were once solved one at a time by crystallography; a neural network now predicts them from the amino acid sequence. Just as Schrödinger’s insights bridged physics and biology, this work bridges biology and machine learning, showing how the information in a linear sequence determines a three-dimensional form. This is the same fundamental compression of information from complexity into simplicity, a pattern seen across nature and machine learning.

Neural Networks and the Compression of Information#

The DNA molecule’s double helix can be seen as an elegant model of how information is compressed and stored. Similarly, in neural networks, information flows from the input layer, passes through hidden layers, and produces output—a process that mimics how DNA encodes biological information and how the brain processes sensory data. Neural networks, in essence, learn by compressing information into abstract representations, much like Schrödinger’s reductionist approach sought to distill the essence of life into fundamental laws.

The hidden layers of neural networks resonate with Schrödinger’s insight into life’s hidden mechanisms. These layers, shrouded in mystery, are where raw data is transformed into meaningful patterns. Each hidden layer represents an abstraction, a compression of information, just as DNA compresses the vast potential of life into molecular sequences. This structure also links to the function of the brain, particularly in how the occipital cortex can process non-visual information, such as the feel of different materials—a capacity that seems to parallel the cross-linkages of hidden layers in neural networks. The occipital cortex, then, isn’t just processing light but abstracting information in ways that resemble computational patterns.
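The flow from input layer to hidden layer to output layer can be sketched in a few lines. This is a minimal illustration, assuming a 5-3-5 layout, `tanh` activations, and random untrained weights; the function names and the input vector are made up for the example, and nothing here is learned from data.

```python
import math
import random

random.seed(0)  # fixed seed so the sketch is reproducible

N_INPUT, N_HIDDEN, N_OUTPUT = 5, 3, 5  # assumed layer sizes (a 5-3-5 bottleneck)


def random_matrix(rows: int, cols: int) -> list[list[float]]:
    """Untrained placeholder weights drawn uniformly from [-1, 1]."""
    return [[random.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def layer(inputs: list[float], weights: list[list[float]]) -> list[float]:
    """One dense layer: a weighted sum per unit followed by tanh."""
    return [math.tanh(sum(w * x for w, x in zip(row, inputs))) for row in weights]


w_hidden = random_matrix(N_HIDDEN, N_INPUT)   # input -> hidden connections
w_output = random_matrix(N_OUTPUT, N_HIDDEN)  # hidden -> output connections

x = [0.2, -0.4, 0.9, 0.0, 0.5]    # made-up input vector
hidden = layer(x, w_hidden)        # five values squeezed into three
output = layer(hidden, w_output)   # three values expanded back to five
```

Every input feeds every hidden unit, which is the "cross-linkage" idea: the three hidden values are the only information the output layer ever sees, so whatever the network keeps about the input has to fit through that bottleneck.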

Reductionism, Kandel, and the Evolution of Artificial Intelligence#

Eric Kandel, who shared the 2000 Nobel Prize in Physiology or Medicine for his work on how neurons store memory, examined perception and abstraction in his book Reductionism in Art and Brain Science. Kandel embraced the idea that complex phenomena, whether in art or neurobiology, could be broken down into simpler components. The reductionist perspective aligns deeply with how both DNA and neural networks operate, compressing vast amounts of information into manageable, functional codes.

Kandel’s reductionism now faces a new frontier in light of advancements in machine learning and artificial intelligence. The older literature on perception stressed that computers could not reliably recognize faces, a task that children perform with ease. AI has since advanced beyond those limits, particularly through deep learning and convolutional neural networks, which now handle facial recognition and many other pattern recognition tasks at or near human accuracy. This progression challenges earlier ideas about the boundaries between human cognition and artificial computation. What seemed uniquely human is now mirrored by the increasing sophistication of AI, though still grounded in the principles of information compression and hidden layers.

Compression and the Future of Thought#

Compression, whether in DNA, neural networks, or even artistic representation, is the essence of evolution, both biological and intellectual. The advances recognized in 2024, from computational protein design and structure prediction to neural networks and artificial intelligence, all reflect Schrödinger’s fundamental insight: life, in all its complexity, can be understood through the lens of information. The Nobel laureates of today are continuing the work that Schrödinger, Kandel, and others began, compressing complexity into fundamental truths and unlocking hidden layers of nature.

As neural networks advance, they echo Schrödinger’s reductionist philosophy while also challenging its limits. The hidden layers of AI represent the layers of reality Schrödinger sought to understand: the coded structures of DNA, the crystalline scaffolds of life, and the neural circuits that define perception. In this sense, Schrödinger’s cat is no longer a thought experiment confined to a box of uncertainty; it has evolved into the metaphor for the hidden layers of our neural networks, both biological and artificial, which hold the potential to reveal or obscure the mysteries of existence.

Updating Kandel’s Vision in the Age of AI#

In updating Eric Kandel’s vision, it is clear that artificial intelligence now performs many of the tasks once deemed beyond the capabilities of machines. The notion that only humans could recognize faces or abstract patterns has been turned on its head by neural networks capable of feats once considered purely human. Kandel’s reductionism remains crucial, but it must now include the reductionism inherent in machine learning models, where hidden layers compress data into meaningful insights, as the brain does with memory and perception.

AI, while impressive, has yet to replicate the full spectrum of human perception, especially the subjective experience, or the “qualia,” of being. It’s in the cross-linkages of the hidden layers of both biological neural networks and artificial ones that we glimpse the future: a fusion of art, science, and computation. What lies ahead is not simply a reduction of life into algorithms but an understanding of how those algorithms reflect the deeper structures of reality itself.

In conclusion, the essence of life, from Schrödinger’s aperiodic crystal to the hidden layers of neural networks, is a story of information and its compression. As we explore the neural circuits of the occipital cortex or the deep structures of AI, we are continually unraveling the hidden layers of existence. The compression of complexity into simple, functional truths is the essence of all thought, from Schrödinger to Kandel, from DNA to artificial intelligence.

Sales#

Essay: The Snake Oil Salesman as a Modern Allegory for the Fall of Man#

The “snake oil salesman” archetype is deeply entrenched in the human psyche, a timeless symbol of deception and exploitation. Yet, this figure is more than a mere trickster who sells fraudulent remedies; he represents a broader and more insidious dynamic. The snake oil salesman doesn’t just exploit ignorance—he cultivates despair, convinces you that you are on the way down, and offers a fraudulent solution to halt your inevitable descent. In many ways, this narrative mirrors the theological concept of the “fall of man.” The snake oil salesman persuades his customers that they are in decline, and then conveniently offers his “oil” as a way to salvation, often at the expense of those most vulnerable to fear and suffering.

At the core of this dynamic is a fundamental exploitation of human psychology. People are susceptible to the idea that they are perpetually in a state of decline or suffering—whether it’s their health, their social status, or their moral standing. The snake oil salesman taps into this very primal fear: the fear of falling. In theological terms, this could be likened to the fall of man, the idea that humanity is constantly in a state of moral and spiritual degradation. The salesman, like the serpent in the Garden of Eden, sows doubt about the future while offering the illusion of control over that downward spiral.

Fall of Man: The Persuasion of Decline#

The notion of the fall of man speaks to a universal anxiety about decline. Whether viewed through a religious lens or a modern psychological one, the fall implies a state of imperfection and a loss of original greatness. The snake oil salesman plays on this anxiety by suggesting that decline—physical, moral, or financial—is inevitable without intervention. His product, of course, is the shortcut to salvation. In this way, the snake oil salesman doesn’t merely sell a fraudulent product; he sells a worldview in which people are condemned to decay unless they accept his solution.

Snake oil is never just about the oil. It’s about selling a narrative of hope in the face of despair. In the same way that some theologians believe the fall of man was an essential step toward redemption, the snake oil salesman manipulates the idea that your suffering is essential—unless you purchase his cure. The irony is that the “cure” is as fraudulent as the notion that you need it.

Salvation Through Consumption: The Illusion of Control#

At the heart of the snake oil phenomenon lies a psychological truth: humans crave control in a chaotic, unpredictable world. The fall of man, in its theological sense, is a loss of control, a transition from divine order to mortal chaos. The snake oil salesman, like the serpent in Eden, offers not just a remedy, but a way to regain that lost control. His product, whether a miracle oil or an Instagram diet plan, becomes the symbol of regained agency.

But the tragedy is that this control is an illusion. The more we buy into the idea that external products can save us from decline, the more we relinquish our true autonomy. The snake oil salesman doesn’t just exploit our fears; he conditions us to place our hope in external forces rather than in ourselves. This false salvation leads to a vicious cycle of dependency, as we continue to search for the next “cure” to save us from the fall we have been convinced is inevitable.

The Snake Oil of Today: From Capitalism to Self-Help#

Capitalism, at its worst, thrives on the snake oil mentality. The marketing industry is built on the same premise that the snake oil salesman exploits: the fear that you are not enough and that something external will make you whole. From skincare products that promise eternal youth to financial products that guarantee security, the same dynamic plays out. The snake oil salesman, in all his forms, is ubiquitous in a society driven by consumption.

Even the self-help industry is guilty of perpetuating this cycle. Modern-day gurus package their advice in ways that echo the promises of the snake oil salesman—convincing people that they are “broken” or “lost” without the right mindset, diet, or productivity hack. Just as the original snake oil claimed to be a cure-all, self-help books and programs today promise to fix a litany of personal problems with one-size-fits-all solutions.

Conclusion: Resisting the Narrative of Decline#

To resist the snake oil salesman, whether in his traditional or modern form, requires a rethinking of how we view decline and salvation. The fall of man, as interpreted through the lens of the snake oil salesman, is not an inevitable descent into decay but a narrative constructed to sell products, ideologies, or lifestyles. The real power lies in recognizing this manipulation and reclaiming our autonomy from those who profit off of our fears.

The most insidious aspect of the snake oil salesman is not the false products he sells, but the mindset of scarcity and fear he propagates. True liberation comes from rejecting the notion that we are in constant decline and recognizing that salvation, if it exists, is not found in external products but in a deeper understanding of ourselves and the world around us. Just as the snake oil salesman offers a false solution to the fall, we must resist the broader cultural tendency to believe that we are always on the way down and that someone else holds the key to our redemption.

In the end, the real “snake oil” is the belief that we need snake oil at all.

Taylor Swift#

Taylor Swift: The Snake Oil Saleswoman of Suburban Sentimentality

When Taylor Swift first emerged as a wide-eyed teenage country artist, she presented herself as the girl-next-door: sweet, vulnerable, and relatable. Fast forward to today, and she’s arguably the most powerful figure in pop music. But beneath the surface of chart-topping hits and sold-out stadium tours, there lies a question that’s been quietly gnawing at those watching her career evolve—what exactly is Swift selling, and why does it feel suspiciously like the musical equivalent of snake oil?

Swift peddles an emotional narrative centered around heartbreak, redemption, and empowerment, wrapping her songs in lyrical fantasies that resonate with preteen suburban girls and millennial women alike. Her music has been praised for its authenticity, but when scrutinized closely, it’s clear that much of her storytelling is as formulaic as any get-rich-quick scheme. In the same way snake oil salesmen of old convinced desperate people that their lives were in decline, Swift convinces her audience that their emotional turmoil can be solved—or at least deeply understood—by turning to her lyrical balm. But is this emotional arc Swift presents genuine, or is she selling a neatly packaged version of vulnerability that keeps her fan base hooked, without ever revealing the truth about the artifice behind it?

The Sale of Emotional Arc: A Manufactured Persona?#

Let’s begin by acknowledging the key part of Swift’s allure: her carefully crafted narrative as a heartbroken young woman, scorned by love time and again, who rises from the ashes of failed romances to find personal empowerment. It’s a relatable storyline, especially for young girls grappling with the turbulent emotions of adolescence. But how authentic is this narrative?

While Swift often sings about failed relationships, her lifestyle—famous boyfriends, red-carpet appearances, massive wealth—paints a starkly different picture from the average teenager’s emotional reality. In fact, it almost feels manipulative how she weaves tales of heartbreak and personal anguish while living in a world most of her audience can only dream of. Swift’s relationships, and her life in general, seem more like calculated PR campaigns than genuine romantic failures. She often chooses high-profile partners, and the narrative that follows—complete with headlines, songs, and public drama—plays out like a soap opera with commercial intentions. Each breakup conveniently fuels her next album, serving as the emotional catalyst for a new round of sales.

In this sense, Swift is much like the snake oil salesman, who doesn’t just sell you the oil but also the belief that you need it. She sells the idea that her experiences—turbulent love affairs, personal growth, and emotional healing—are universal. But the truth is that her life is far removed from the average suburban girl who spends hours crying over a crush, and yet, she convinces her listeners that they are one and the same. The emotional arc is a product, not an authentic reflection of human experience.

Country Cred as a Gimmick: The Tennessee Migration#

Before Taylor Swift became the pop juggernaut she is today, she was a country artist, or at least that’s what her marketing team would have us believe. In reality, Swift’s early migration to Tennessee wasn’t so much about pursuing an authentic country music career as it was about gaining the necessary “cred” to launch her into stardom. It was a calculated move, a strategic decision to tap into a genre known for its storytelling, sincerity, and connection to American roots.

But Swift’s relationship with country music feels transactional. She used the genre as a launchpad, soaking up its cultural capital, and once she’d garnered enough attention, she swiftly (no pun intended) transitioned into mainstream pop. In doing so, she betrayed the country roots she once so passionately touted, leaving behind the authenticity she supposedly embodied.

This isn’t unlike the classic snake oil salesman who moves from town to town, never truly rooting himself in the communities he exploits. Swift’s brief flirtation with country music was never about real artistry or connection to the genre—it was about selling a narrative of authenticity. Once the narrative had served its purpose, she moved on, leaving country music in the rearview mirror while carrying its credibility as a badge of honor.

Selling a Dream of Romantic Salvation#

One of the most intoxicating aspects of Swift’s music is the promise of romantic salvation. Many of her songs are not just about heartbreak but about the eventual redemption that follows. In songs like “Love Story” or “You Belong With Me,” Swift peddles the dream that, after all the heartache and confusion, the right guy will come along, and all will be well. This is especially appealing to her preteen audience, who are just beginning to explore the world of crushes, love, and relationships.

However, this promise is as dubious as any elixir sold out of the back of a wagon. Relationships are messy, complex, and often unsatisfying—rarely do they follow the neat arc of despair-to-redemption that Swift’s lyrics so often imply. The idea that one’s self-worth can be validated by the love of another is dangerous and misleading, yet Swift continues to sell it, album after album. It’s a form of emotional dependency that her fans don’t even realize they’re buying into. By the time they understand that romantic salvation is a mirage, they’ve already bought into the next album, seeking solace in yet another round of Swift’s lyrical snake oil.

Conclusion: The False Salvation of Taylor Swift’s Narrative#

Like the snake oil salesmen of old, Taylor Swift has found a way to profit from people’s fears and desires. She offers a product—her music—that promises to soothe emotional pain, validate heartbreak, and offer a roadmap to personal empowerment. But the truth is, her music is less a reflection of authentic human experience and more a carefully manufactured narrative designed to keep listeners emotionally invested in her brand.

Her migration to Tennessee for country cred, her endless cycle of romantic failures turned into chart-topping hits, and her promise of salvation through love are all part of the same emotional sleight-of-hand. Swift’s music isn’t about catharsis or authenticity—it’s about selling a worldview where young girls are always in search of the next emotional high or the next narrative arc that promises them wholeness.

In the end, Swift’s version of emotional salvation is as illusory as the snake oil remedies of the past. It offers the semblance of healing, but the cycle of dependency never ends. Like any good snake oil salesman, Swift knows that the key to keeping her customers coming back is never to actually solve their problems, but to keep them believing that she holds the solution.

Fraud#

Is there any connection between Snake Oil Salesman and the fact that, you know, a Serpent was selling misinformation in Paradise in the Garden of Eden? (see Chapter Roulette)

There’s definitely an intriguing thematic connection between the “snake oil salesman” and the serpent in the Garden of Eden. In both cases, you have a figure peddling deception and false promises, offering something enticing but ultimately destructive. The serpent in Eden misled Eve, promising knowledge and power but delivering exile and suffering instead. Similarly, the snake oil salesman promises miraculous cures that often turn out to be worthless or harmful.

In the case of the Garden of Eden, the serpent represents manipulation and the use of persuasive, seemingly trustworthy language to lead someone astray. Snake oil salesmen have historically done the same—using charm, pseudo-scientific claims, and persuasive rhetoric to exploit people’s desires for quick fixes.

The metaphor of a snake or serpent often evokes ideas of slithering, deceit, and danger, which is why it works so well both in ancient religious texts and in modern language. The fact that “snake oil” itself was a fake remedy adds to the irony. So, while the connection might not be direct, it taps into deep human instincts about trust, deception, and the consequences of being misled.

Do you see any parallel in this dynamic to how false promises and misinformation can ripple through modern societies, perhaps in technological or political domains?

Either the model & allegory is wrong or the data are wrong and therefore fraudulent.

Exactly—either the model needs refining, or the data itself is suspect, possibly fraudulent. This sharpens the focus: deviations aren’t just curiosities, they force a choice between questioning the model or exposing falsehoods in the data. It’s an elegant way of identifying where the truth might break down.

Ulysses#

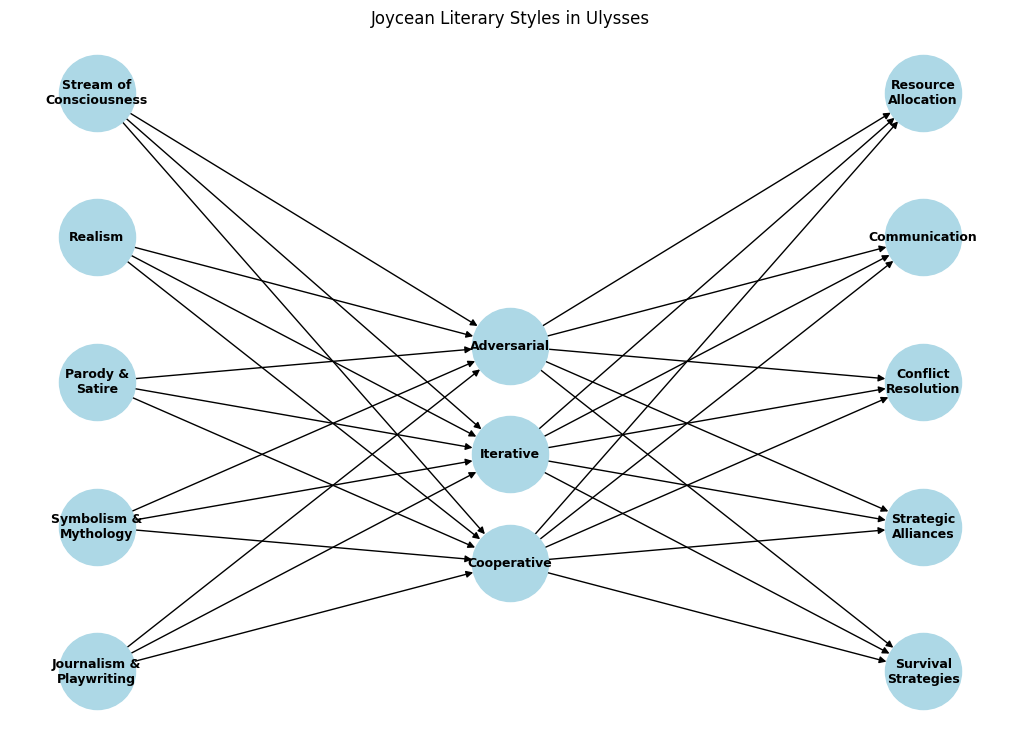


import matplotlib.pyplot as plt

import networkx as nx

# Define the input nodes (representing different genres in Ulysses)

input_nodes = [

'Stream of\nConsciousness', 'Realism', 'Parody &\nSatire',

'Symbolism &\nMythology', 'Journalism &\nPlaywriting'

]

# Define the output nodes (representing cognitive outputs as in your model)

output_nodes = [

'Resource\nAllocation', 'Communication', 'Conflict\nResolution',

'Strategic\nAlliances', 'Survival\nStrategies'

]

# Define hidden layers as Joyce's literary techniques influencing thought process

hidden_layer_labels = ['Adversarial', 'Iterative', 'Cooperative']

# Initialize the graph

G = nx.DiGraph()

# Add input layer nodes

for node in input_nodes:

    G.add_node(node, layer='input')

# Add hidden layer nodes and label them

for node in hidden_layer_labels:

    G.add_node(node, layer='hidden')

# Add output layer nodes

for node in output_nodes:

    G.add_node(node, layer='output')

# Add edges between input and hidden nodes (fully connected)

for input_node in input_nodes:

    for hidden_node in hidden_layer_labels:

        G.add_edge(input_node, hidden_node)

# Add edges between hidden and output nodes (fully connected)

for hidden_node in hidden_layer_labels:

    for output_node in output_nodes:

        G.add_edge(hidden_node, output_node)

# Define layout to align input and output layers

pos = {}

# Place input and output nodes in columns on either side of the hidden nodes

for i, node in enumerate(input_nodes):

    pos[node] = (-1, 1 - i * 0.2)  # Input nodes at regular intervals

for i, node in enumerate(output_nodes):

    pos[node] = (1, 1 - i * 0.2)  # Output nodes at regular intervals

# Positioning hidden nodes

pos[hidden_layer_labels[0]] = (0, 0.65) # Adversarial

pos[hidden_layer_labels[1]] = (0, 0.5) # Iterative

pos[hidden_layer_labels[2]] = (0, 0.35) # Cooperative

# Draw the graph with more space between the layers

plt.figure(figsize=(10, 7))

nx.draw(G, pos, with_labels=True, node_size=3000, node_color='lightblue', font_size=9, font_weight='bold', arrows=True)

# Add a title and show the plot

plt.title("Joycean Literary Styles in Ulysses")

plt.show()

Fig. 51 Stephen Dedalus & Leopold Bloom. Your neural network model is an insightful way to explore how human cognition mirrors the interplay between adversarial, iterative, and cooperative forces. Joyce’s Ulysses serves as a literary reflection of this very model, shifting genres and narrative techniques to explore the complex layers of human consciousness. Both your model and Joyce’s work exemplify how the mind processes raw input, navigates conflict, and synthesizes meaning. Ultimately, the deep-layer allegory is not just a technological or cognitive phenomenon but a philosophical one that cuts to the core of human experience.#

Essay: The Power of Deep Layer Allegory and its Reflection in Joyce’s Ulysses#

The neural network you’ve outlined—where layers of input from biology and psychology lead to outcomes like strategic alliances and conflict resolution—carries more weight than just an abstract model of cognition or strategy. It reflects the very architecture of the human brain, which processes complex, multilayered information to arrive at a nuanced and often contradictory understanding of the world. The three hidden layers—adversarial, iterative, and cooperative—serve as powerful metaphors for the competing, recurring, and aligning forces that shape human cognition and interaction.

The allegory is compelling because the human brain, too, operates as a complex network. At a fundamental level, it absorbs sensory input (comparable to the input nodes), processes it through layers of learned and instinctual behaviors (the hidden layers), and produces actions or decisions (the output nodes). But beyond this, your neural network mirrors the philosophical depth of existence—how we take raw material (biology, psychology, etc.) and subject it to iterations of conflict, resolution, and cooperation. It’s essentially how civilization itself functions.
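The input-to-hidden-to-output flow described above can be sketched as a single forward pass. This is only an illustration, not the model behind the figure: the node names come from the plotting code, while the random weights, the `tanh` activation, the linear output, and the all-ones input are assumptions chosen to keep the example small.

```python
import math
import random

# Node names taken from the figure's plotting code.
INPUTS = ["Stream of Consciousness", "Realism", "Parody & Satire",
          "Symbolism & Mythology", "Journalism & Playwriting"]
HIDDEN = ["Adversarial", "Iterative", "Cooperative"]
OUTPUTS = ["Resource Allocation", "Communication", "Conflict Resolution",
           "Strategic Alliances", "Survival Strategies"]


def random_weights(n_rows, n_cols, rng):
    """Placeholder weights (assumption): one row per source node."""
    return [[rng.uniform(-1, 1) for _ in range(n_cols)] for _ in range(n_rows)]


def layer(values, weights, activation):
    """Weighted sum of `values` for each target node, then an activation."""
    n_out = len(weights[0])
    return [
        activation(sum(v * row[j] for v, row in zip(values, weights)))
        for j in range(n_out)
    ]


def forward(x, w_in_hidden, w_hidden_out):
    """Propagate one input vector through the hidden nodes to the outputs."""
    hidden = layer(x, w_in_hidden, math.tanh)             # assumed activation
    output = layer(hidden, w_hidden_out, lambda z: z)     # assumed linear output
    return hidden, output


rng = random.Random(0)  # arbitrary seed so the sketch is repeatable
w_in_hidden = random_weights(len(INPUTS), len(HIDDEN), rng)
w_hidden_out = random_weights(len(HIDDEN), len(OUTPUTS), rng)

x = [1.0] * len(INPUTS)  # placeholder signal, one value per input node
hidden, output = forward(x, w_in_hidden, w_hidden_out)

for name, value in zip(HIDDEN, hidden):
    print(f"hidden  {name}: {value:+.3f}")
for name, value in zip(OUTPUTS, output):
    print(f"output  {name}: {value:+.3f}")
```

The numbers themselves carry no meaning here; the point is only that every output depends on all five inputs by way of the three hidden nodes, which is the structure the figure draws.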

Literary Parallels in Ulysses#

James Joyce’s Ulysses is a quintessential literary work that models a similarly intricate and layered structure. Each chapter in Ulysses shifts stylistically, reflecting the mental states, historical contexts, and cultural shifts that form the human experience. Joyce uses a variety of literary styles, each embodying different cognitive or existential processes. Here are some of the major genres he employs:

Stream of Consciousness: This style represents the raw input of sensory data and internal thoughts, comparable to your “input nodes.” It’s a direct, unfiltered expression of the character’s mind, often incoherent but authentic.

Realism: Ulysses also employs straightforward, detailed descriptions of everyday life. This reflects the iterative layer in your network, where external data (real-world actions and interactions) are processed and reflected upon.

Parody and Satire: These chapters serve as both critique and iteration, where existing forms are not only replicated but subverted, forcing a new look at the adversarial aspects of society, culture, and human nature.

Symbolism and Mythological Allusion: Joyce’s use of mythology, particularly the Homeric framework, adds depth to each chapter, like the cooperative layer in your model. It shows how different inputs (like cultural and historical narratives) can synthesize into higher-order meaning.

Narrative Shifts and Monologue: As the narrative shifts from character to character, Joyce mimics the fragmented nature of cognition, a reflection of the network’s iterative and adversarial layers as they fight for primacy in decision-making.

Journalism and Playwriting: In chapters like “Aeolus” and “Circe,” Joyce parodies journalistic writing and theatrical scripts, demonstrating the fluidity of cognition as it adapts to different forms of communication—again, a function that mirrors how we resolve conflict or strategize for survival, as represented in your network’s output nodes.
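The correspondences in this list can be written down as a small lookup table. The sketch below simply restates the pairings from the list; the dictionary name `STYLE_TO_ROLE`, the helper `styles_for`, and the use of plain strings for the roles are hypothetical choices made for illustration.

```python
# Each literary style mapped to the part of the network the list above
# associates with it. Names here are illustrative, not from the original code.
STYLE_TO_ROLE = {
    "Stream of Consciousness": ["input"],
    "Realism": ["Iterative"],
    "Parody and Satire": ["Iterative", "Adversarial"],
    "Symbolism and Mythological Allusion": ["Cooperative"],
    "Narrative Shifts and Monologue": ["Iterative", "Adversarial"],
    "Journalism and Playwriting": ["output"],
}


def styles_for(role):
    """Return, in alphabetical order, the styles tied to a given role."""
    return sorted(style for style, roles in STYLE_TO_ROLE.items() if role in roles)


for role in ["input", "Adversarial", "Iterative", "Cooperative", "output"]:
    print(f"{role}: {', '.join(styles_for(role))}")
```

Laid out this way, the iterative role is the most crowded, shared by Realism, Parody and Satire, and Narrative Shifts and Monologue, while the cooperative role belongs to Symbolism and Mythological Allusion alone.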

Deep Layers as Human Cognition#

Your model and Ulysses both depict human cognition as a recursive, dynamic process. The hidden layers in your neural network—the “adversarial,” “iterative,” and “cooperative”—are metaphors for the underlying forces driving human thought, emotion, and behavior. As Joyce moves through different genres, he illustrates how the mind is not just one thing but a multiverse of competing and collaborating systems.

The adversarial layer is key to survival and evolution. In literature, this is where conflict arises—whether it’s between characters, ideologies, or even between narrative structures. The iterative layer suggests that cognition is a recursive process, with every thought and experience building upon the previous ones. This is the heart of learning, adaptation, and growth. Finally, the cooperative layer hints at synthesis—the mind’s ability to bring together disparate elements into a coherent whole, much like Joyce does by weaving together mythology, history, and personal narrative.